Model Monitoring

Model monitoring is an operational stage in the machine learning lifecycle that follows model deployment. It involves tracking your ML models for model degradation, data drift, and concept drift, and ensuring that each model maintains an acceptable level of performance. Our model monitoring tool automates model evaluation and raises alerts when a production model has degraded to the point where an expert should perform a detailed evaluation.
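To make data drift concrete, the sketch below compares a feature's production values against its training-time values using the population stability index (PSI). PSI is one common drift statistic, chosen here for illustration only; it is an assumption, not necessarily what the Katonic tool computes. The function name `population_stability_index` and the 0.2 alert threshold (a widely used rule of thumb) are likewise illustrative.

```python
import math
from typing import List, Sequence


def population_stability_index(
    expected: Sequence[float],
    actual: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """Illustrative PSI sketch (not the Katonic implementation).

    `expected` is a reference sample (e.g. training data) and `actual` is a
    production sample of the same feature. Larger values mean more drift.
    """
    lo, hi = min(expected), max(expected)
    # Equal-width bins over the reference range; guard against a constant feature.
    width = (hi - lo) / bins or 1.0

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # Values outside the reference range are clamped into the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        total = len(values)
        # eps keeps empty bins from producing log(0) or division by zero.
        return [max(c / total, eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Example: a feature whose production distribution has shifted upward by 3.0.
reference = [0.1 * i for i in range(100)]
production = [0.1 * i + 3.0 for i in range(100)]
psi = population_stability_index(reference, production)
if psi > 0.2:  # rule-of-thumb threshold, not a Katonic default
    print(f"Possible data drift (PSI={psi:.3f})")
```

Identical samples give a PSI of zero; the further the production histogram moves away from the reference histogram, the larger the score.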

The Katonic Model Monitoring System provides:

- Built-in Model Monitoring

- Automated Retraining

How to Access the Monitoring System

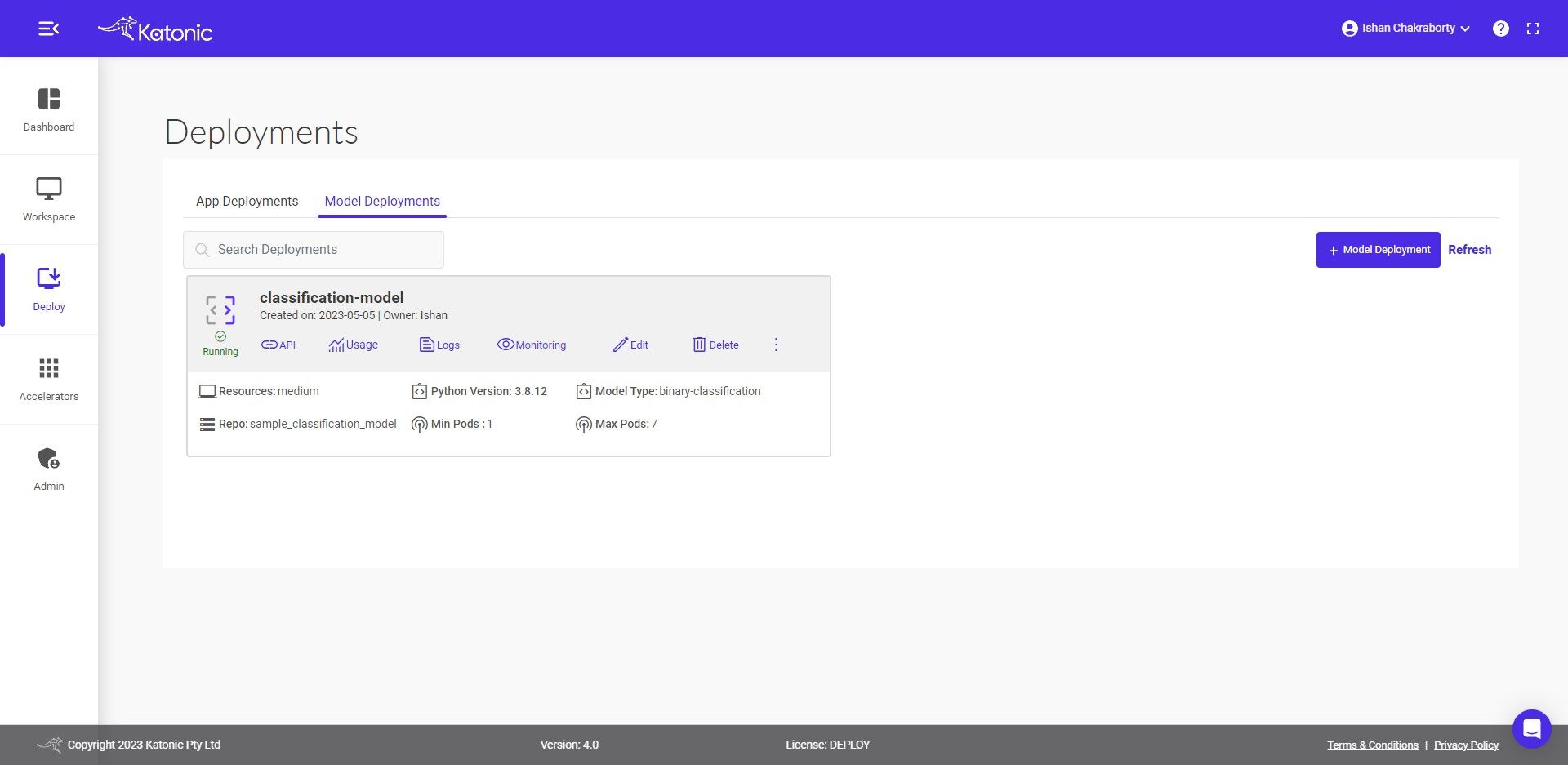

1. Navigate to the Deploy section from the sidebar on the platform.

2. Click ‘Monitor’ to track the effectiveness and efficiency of your deployed model.

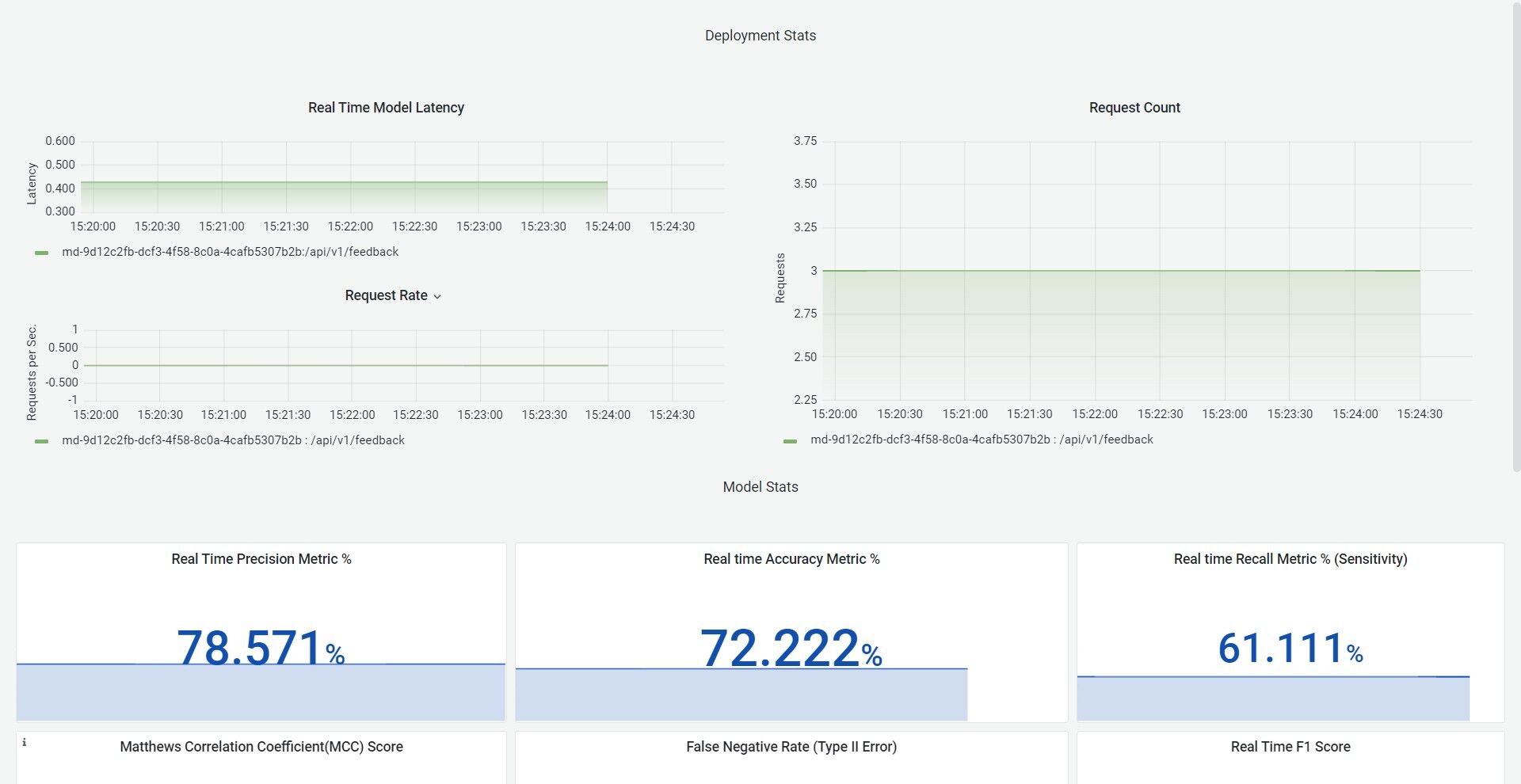

The Katonic Model Monitoring System opens in a new tab. There you can get real-time insights and alerts on model performance and data characteristics, debug anomalies, and, depending on your use case, trigger ML production pipelines that retrain your models with new data.
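The alert-and-retrain flow described above comes down to comparing monitored metrics against limits and starting a pipeline when a limit is breached. The sketch below illustrates only that decision logic, under stated assumptions: `check_and_trigger`, the metric names, and the `trigger_retraining` callback are hypothetical, and the Katonic system configures its triggers through its own interface rather than through this code.

```python
from typing import Callable, Dict, List


def check_and_trigger(
    metrics: Dict[str, float],
    upper_limits: Dict[str, float],
    trigger_retraining: Callable[[List[str]], None],
) -> List[str]:
    """Call a (hypothetical) retraining hook if any metric exceeds its upper limit."""
    # Only metrics that have both a current value and a configured limit are checked.
    breaches = [
        name for name, limit in upper_limits.items()
        if name in metrics and metrics[name] > limit
    ]
    if breaches:
        # Hypothetical hook: in practice this might start a retraining pipeline.
        trigger_retraining(breaches)
    return breaches


# Example: drift is above its limit and latency is not, so the hook fires once.
triggered: List[List[str]] = []
result = check_and_trigger(
    metrics={"drift_score": 0.31, "latency_ms": 120.0},
    upper_limits={"drift_score": 0.2, "latency_ms": 200.0},
    trigger_retraining=triggered.append,
)
```

Every limit here is an upper bound, which suits drift scores and latency; metrics where lower is worse, such as accuracy, would need a lower-bound check instead.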

The dashboard provides two types of metrics, both based on real-time feedback: operational metrics and model-based metrics.

Operational (for all model types)

- Real-time request latency

- Real-time request rate

- Real-time request count
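As a rough illustration of what these three operational metrics measure, the sketch below derives them from a log of prediction requests over a sliding time window. The `RequestRecord` structure, the `operational_metrics` function, and the 60-second window are hypothetical assumptions for illustration; they do not describe how the Katonic dashboard collects its data.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class RequestRecord:
    # Hypothetical log entry for one prediction request.
    timestamp: float   # arrival time, in seconds
    latency_ms: float  # time taken to return the prediction


def operational_metrics(
    records: List[RequestRecord], now: float, window_s: float = 60.0
) -> Dict[str, float]:
    """Summarise requests that arrived in the window (now - window_s, now]."""
    recent = [r for r in records if now - window_s < r.timestamp <= now]
    count = len(recent)
    latencies = [r.latency_ms for r in recent]
    return {
        "request_count": count,
        "request_rate_per_s": count / window_s,
        "mean_latency_ms": sum(latencies) / count if count else 0.0,
        "max_latency_ms": max(latencies, default=0.0),
    }


# Example: five requests, of which the last four fall inside the 60-second window.
log = [RequestRecord(t, ms) for t, ms in [(90, 10), (110, 20), (130, 30), (150, 40), (160, 50)]]
metrics = operational_metrics(log, now=160.0)
# metrics["request_count"] == 4, metrics["mean_latency_ms"] == 35.0
```

A production system would typically report latency percentiles rather than only the mean and maximum; the simpler statistics keep the sketch short.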

Model-based (for all model types)

These metrics are built specifically for each model type. If your model doesn't fit any of the existing categories, you can always choose Others in the Model Type field.