Katonic Model Registry Deployment

Model deployment is the process of putting machine learning models into production. This makes a model’s predictions available to users, developers, or systems, so that they can make data-driven business decisions, interact with applications (for example, recognizing a face in an image), and so on.

If your model needs to serve another application, expose it as an API endpoint. Katonic Model APIs are scalable REST APIs that can create an endpoint from any Python function.

Katonic Model APIs are commonly used when you need to query your model in near real time. Model APIs are built as Docker images and deployed on Katonic.

You can export the model images to your external container registry and deploy them in any other hosting environment outside of Katonic using your custom CI/CD pipeline. Katonic supports REST APIs that enable you to programmatically build new model images on Katonic and export them to your external container registry.

To deploy a model stored in the Katonic Model Registry as a Katonic Model API:

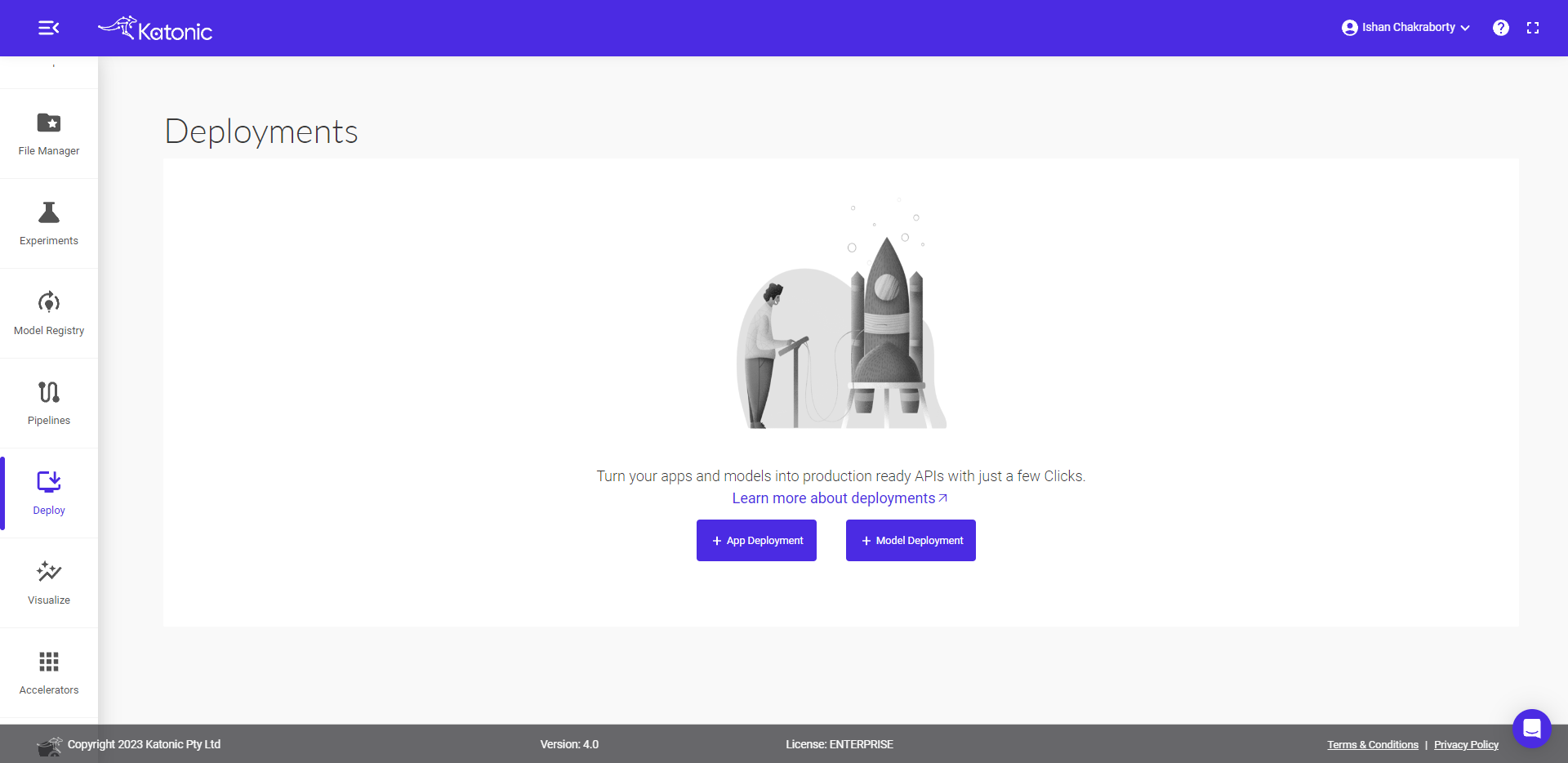

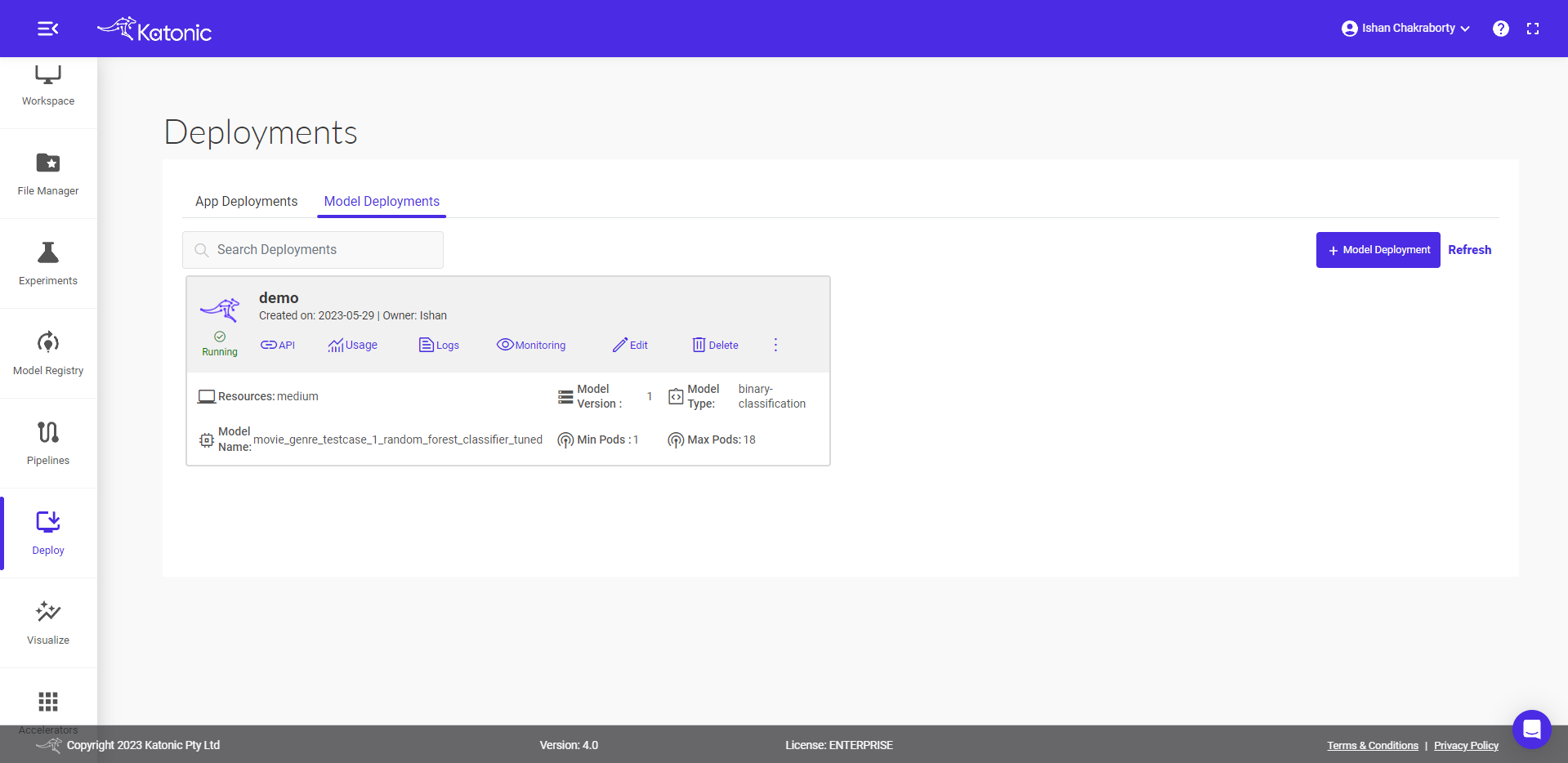

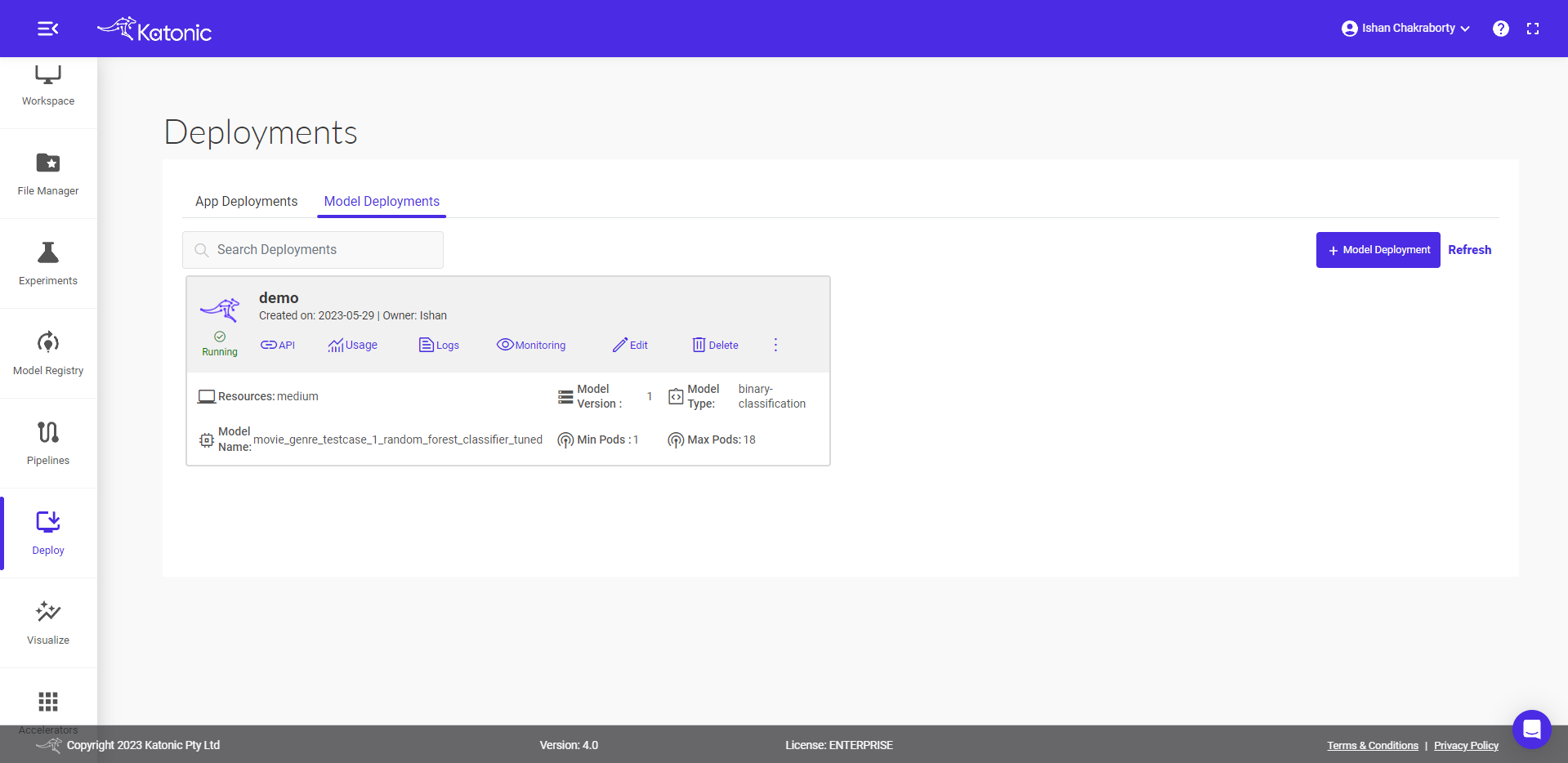

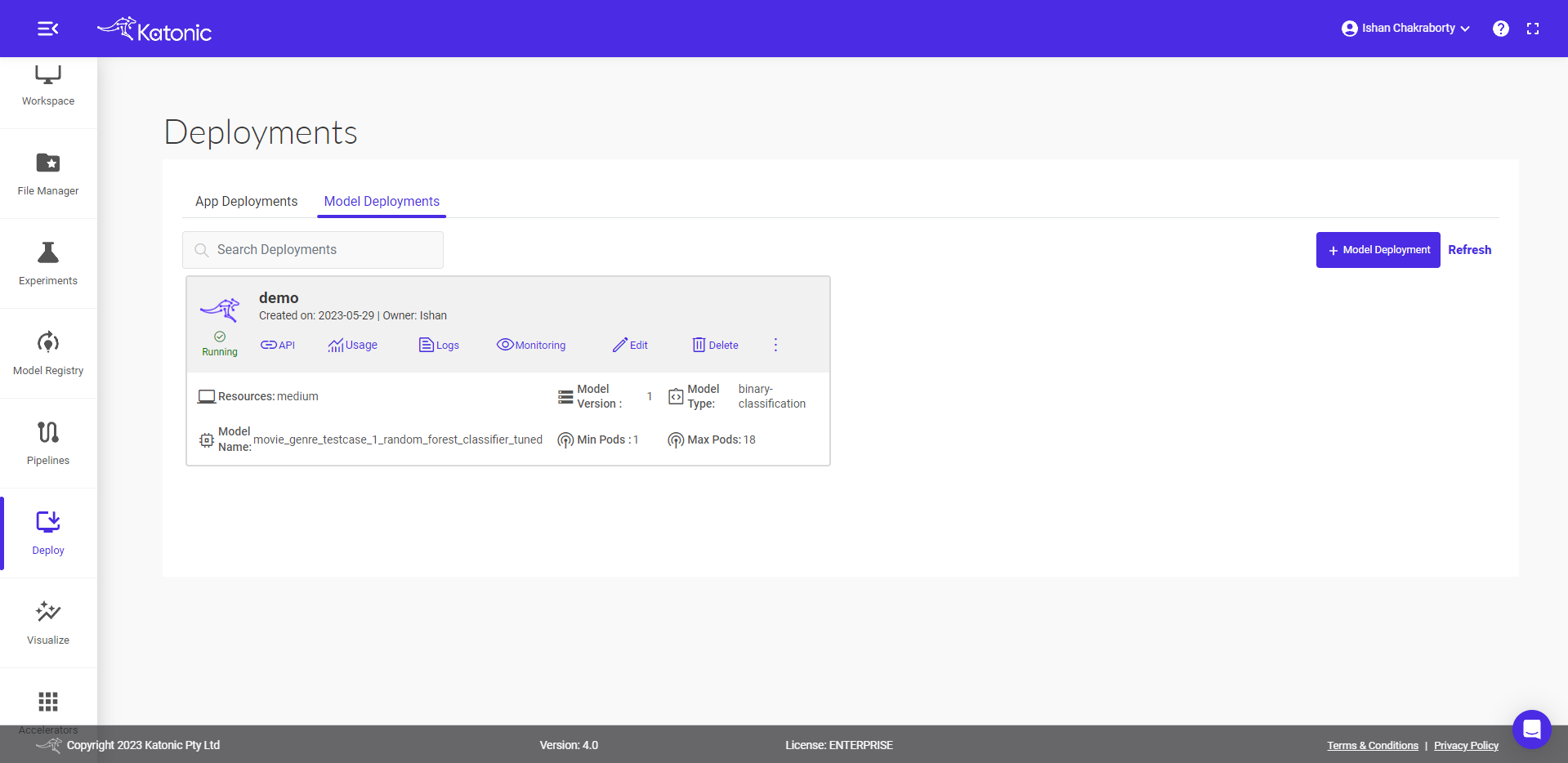

Navigate to the Deploy section from the sidebar of the platform.

Click on ‘Model Deployment’.

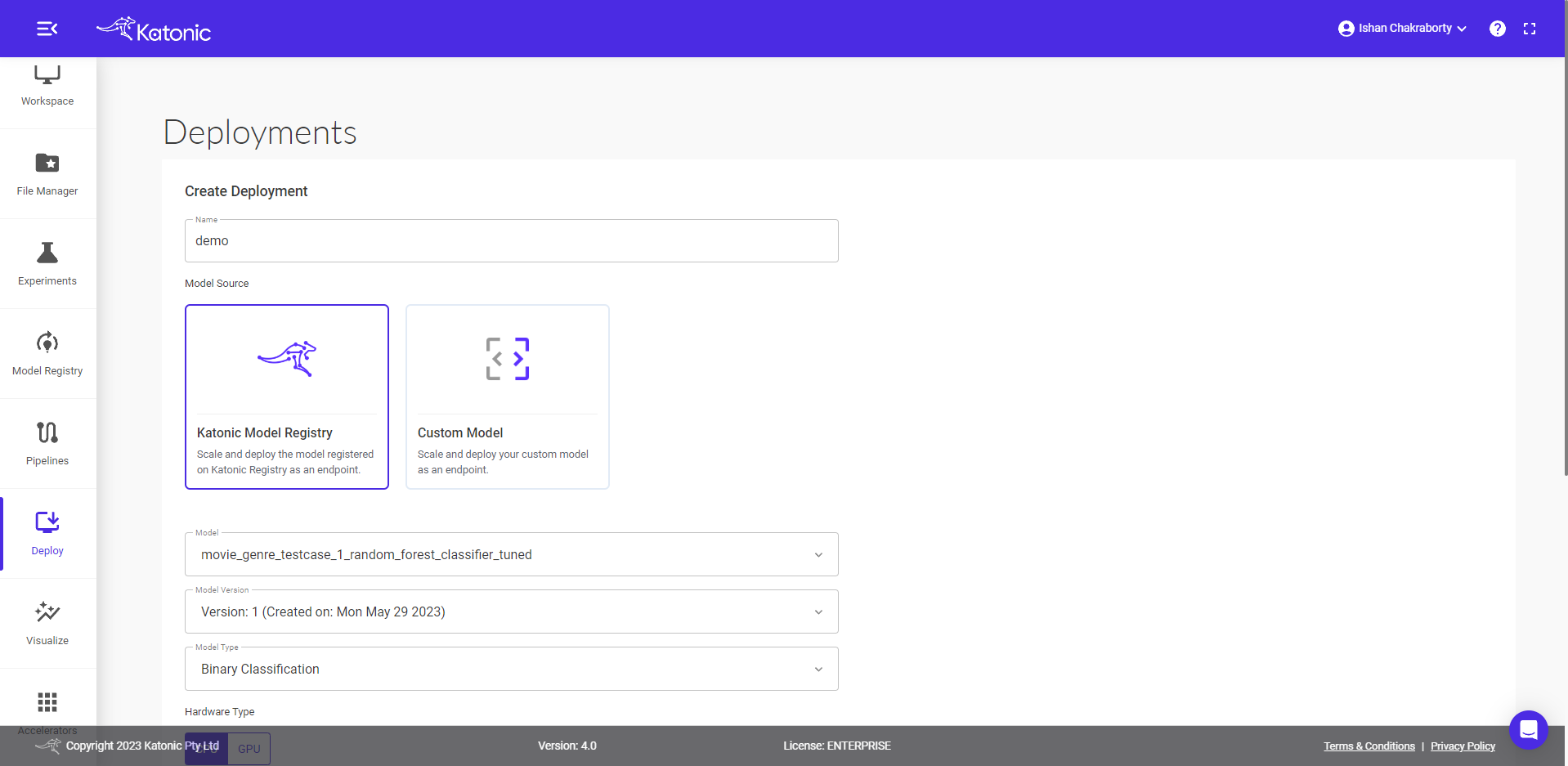

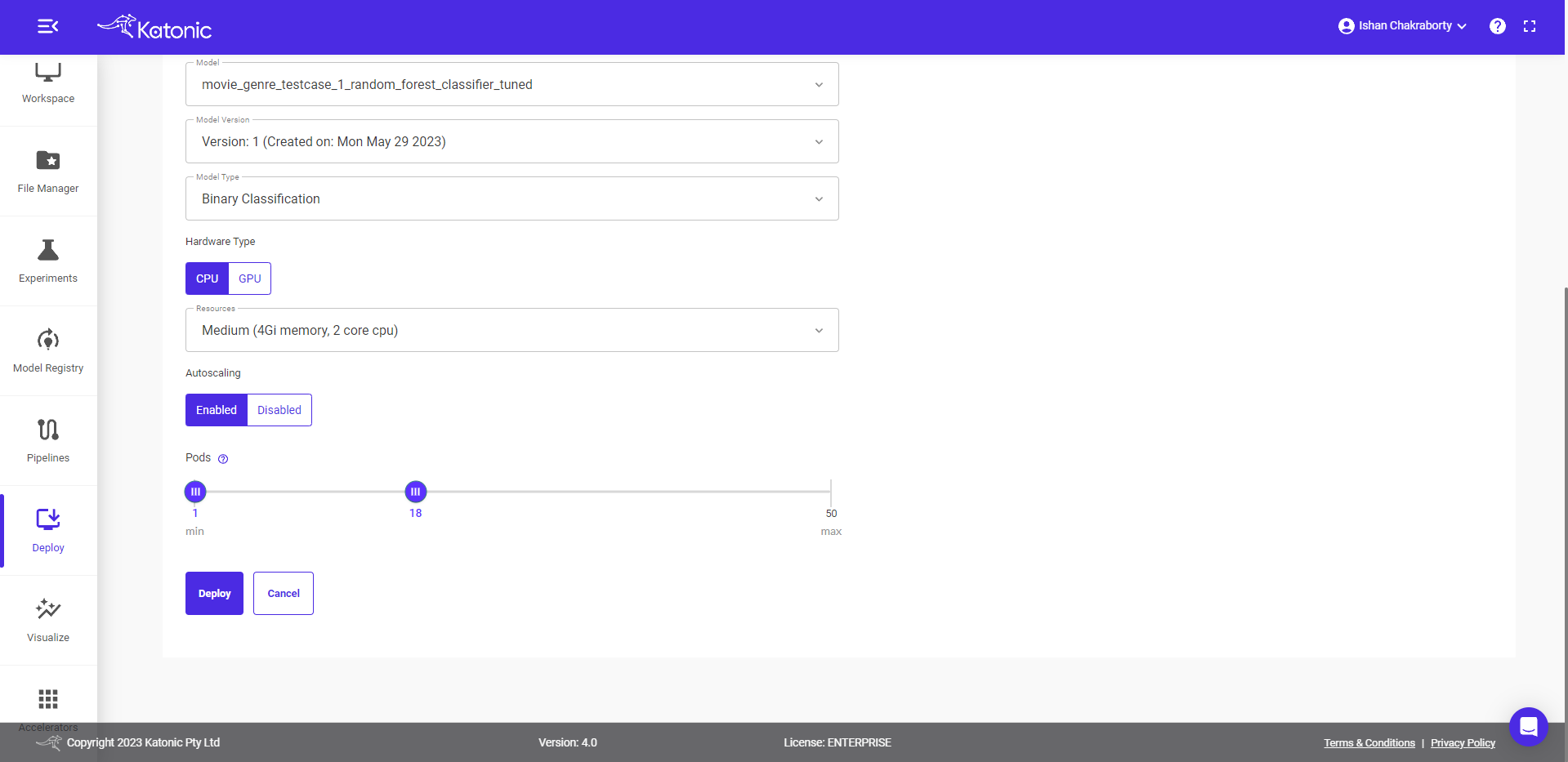

Fill in your model details in the dialog box.

Give a name to your deployment and proceed to the next field.

Select Katonic Model Registry as the Model Source.

Select a model from the dropdown list.

Select a model version from the dropdown.

Select the appropriate model type.

Select CPU or GPU as the Hardware type.

Select the resources required for your model deployment from the dropdown.

If autoscaling is enabled, select an appropriate pod range (minimum and maximum pods) for the model.

Click on Deploy.

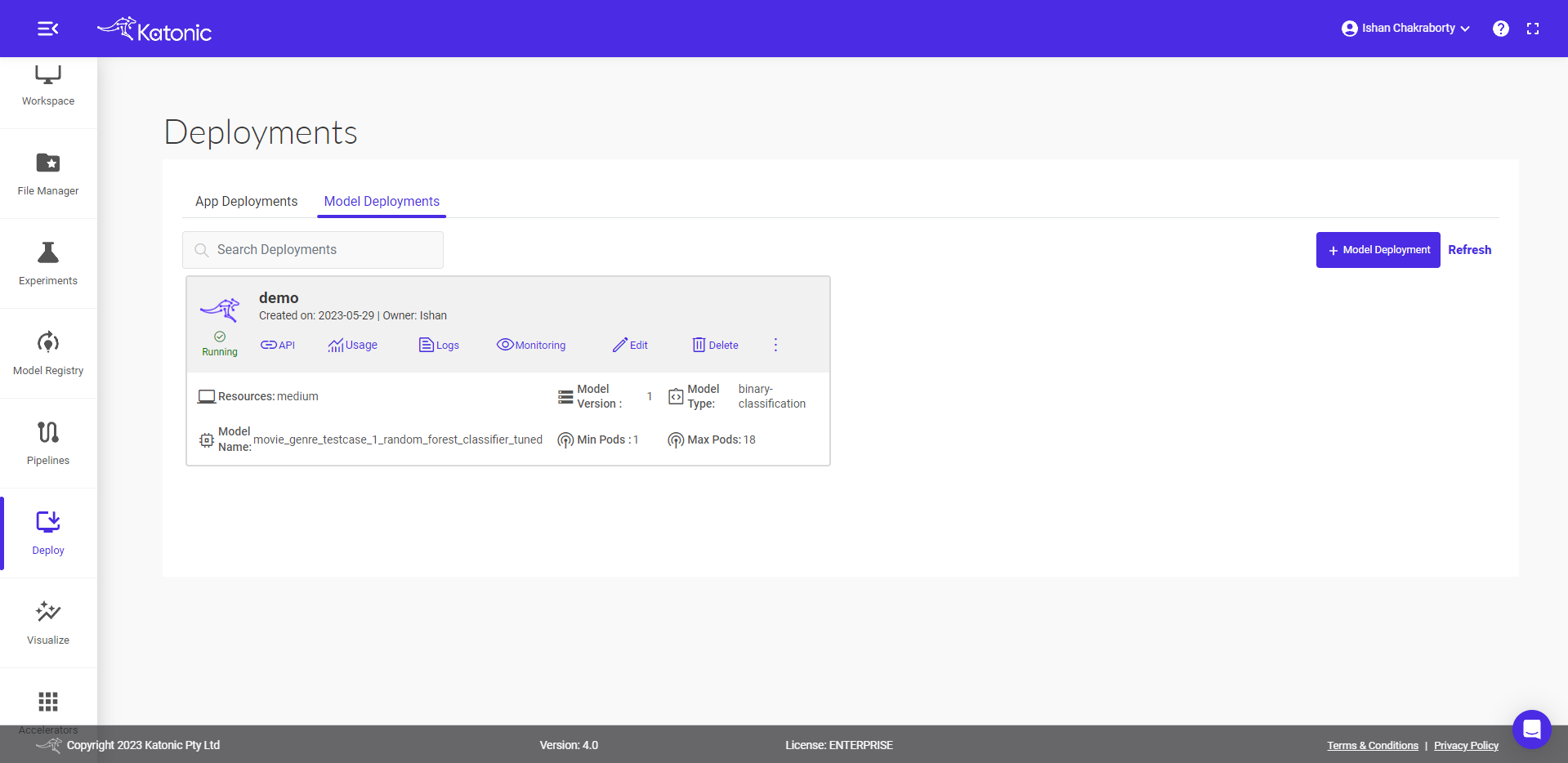

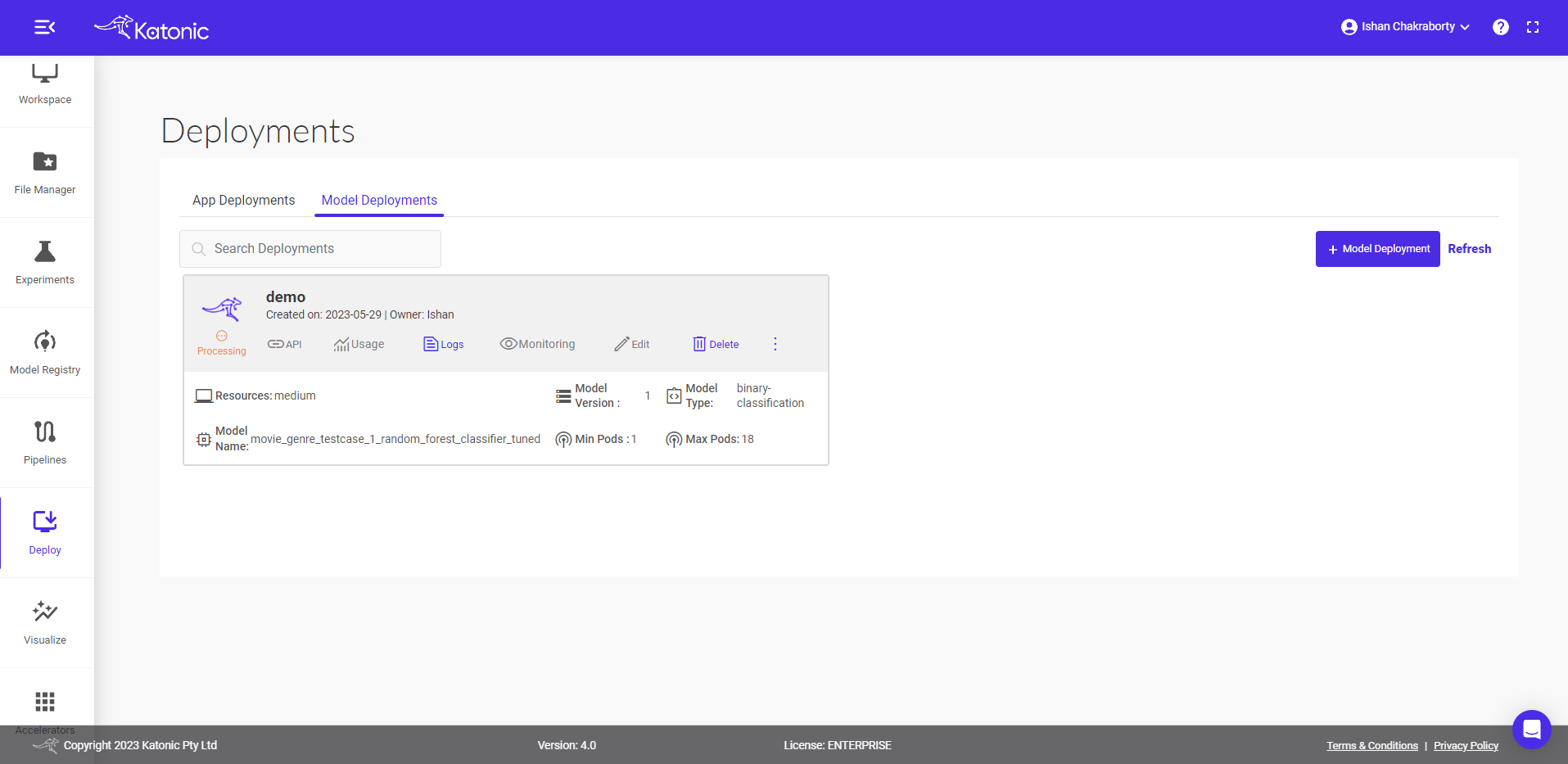
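The values entered in the dialog can be thought of as one configuration record. The sketch below is illustrative only: `DeploymentConfig`, its field names, and the example values are hypothetical and not part of any Katonic SDK. It checks constraints implied by the form, assuming that the hardware type is CPU or GPU and that, with autoscaling enabled, the minimum pod count is at least 1 and does not exceed the maximum.

```python
from dataclasses import dataclass


@dataclass
class DeploymentConfig:
    """Hypothetical record mirroring the Model Deployment dialog fields."""

    name: str
    model_name: str          # model selected from the registry dropdown
    model_version: str       # version selected from the dropdown
    model_type: str
    hardware: str = "CPU"    # "CPU" or "GPU"
    autoscaling: bool = False
    min_pods: int = 1
    max_pods: int = 1

    def validate(self) -> None:
        """Raise ValueError if the values are inconsistent (assumed rules)."""
        if not self.name.strip():
            raise ValueError("deployment name must not be empty")
        if self.hardware not in ("CPU", "GPU"):
            raise ValueError("hardware must be 'CPU' or 'GPU'")
        if self.autoscaling and not 1 <= self.min_pods <= self.max_pods:
            raise ValueError("pod range must satisfy 1 <= min_pods <= max_pods")


# Example values are placeholders.
config = DeploymentConfig(
    name="churn-api",
    model_name="churn-classifier",
    model_version="1",
    model_type="classification",
    autoscaling=True,
    min_pods=1,
    max_pods=3,
)
config.validate()  # raises nothing for a consistent configuration
```

Checking the pod range before clicking Deploy catches the most common inconsistency (a minimum larger than the maximum) without waiting for the deployment to fail.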

Once your Model API is created, it appears in the Deploy section, initially in the "Processing" state. Click Refresh to update the status.

- You can also check the progress of the current deployment using the Logs option.

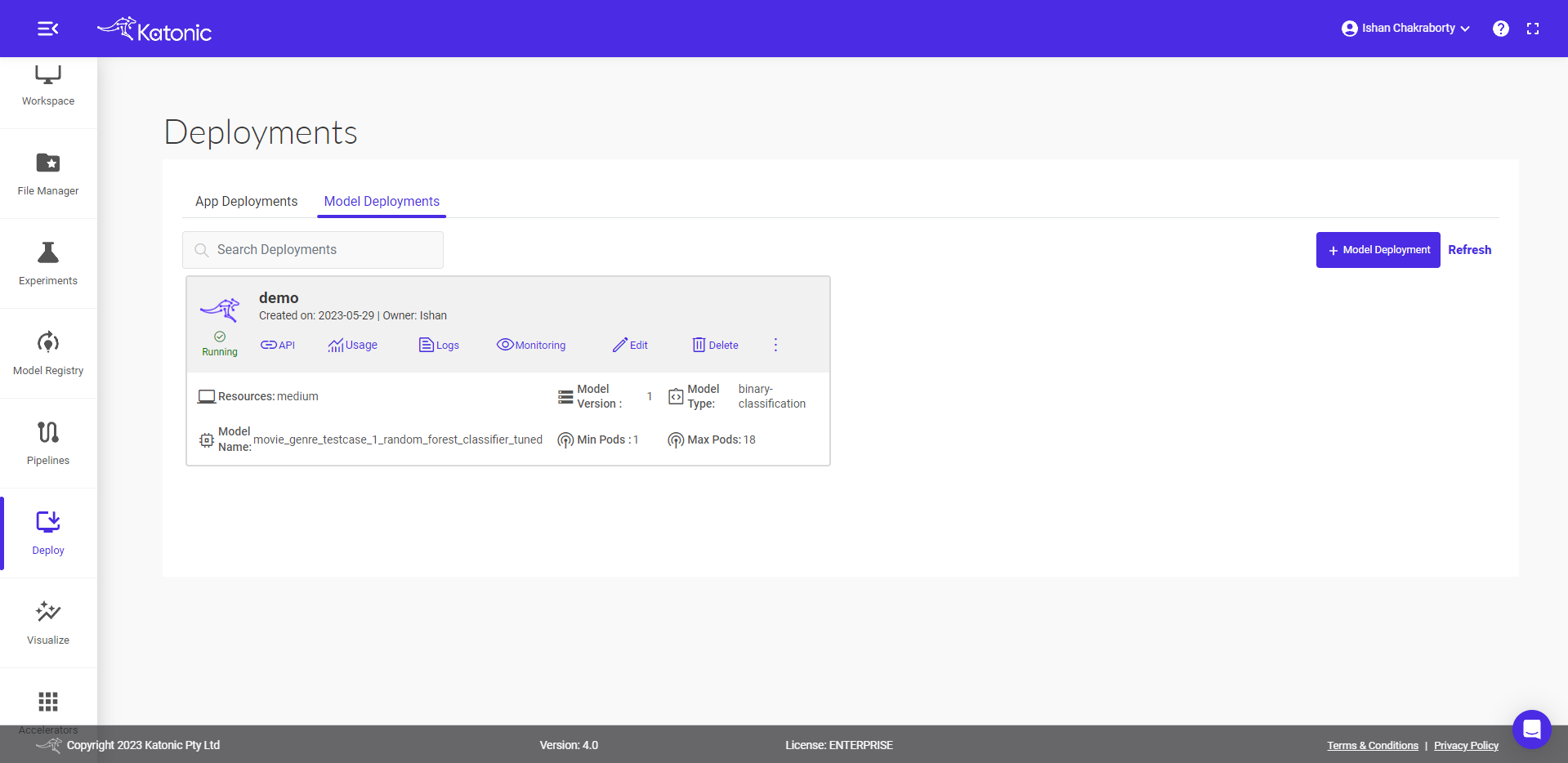

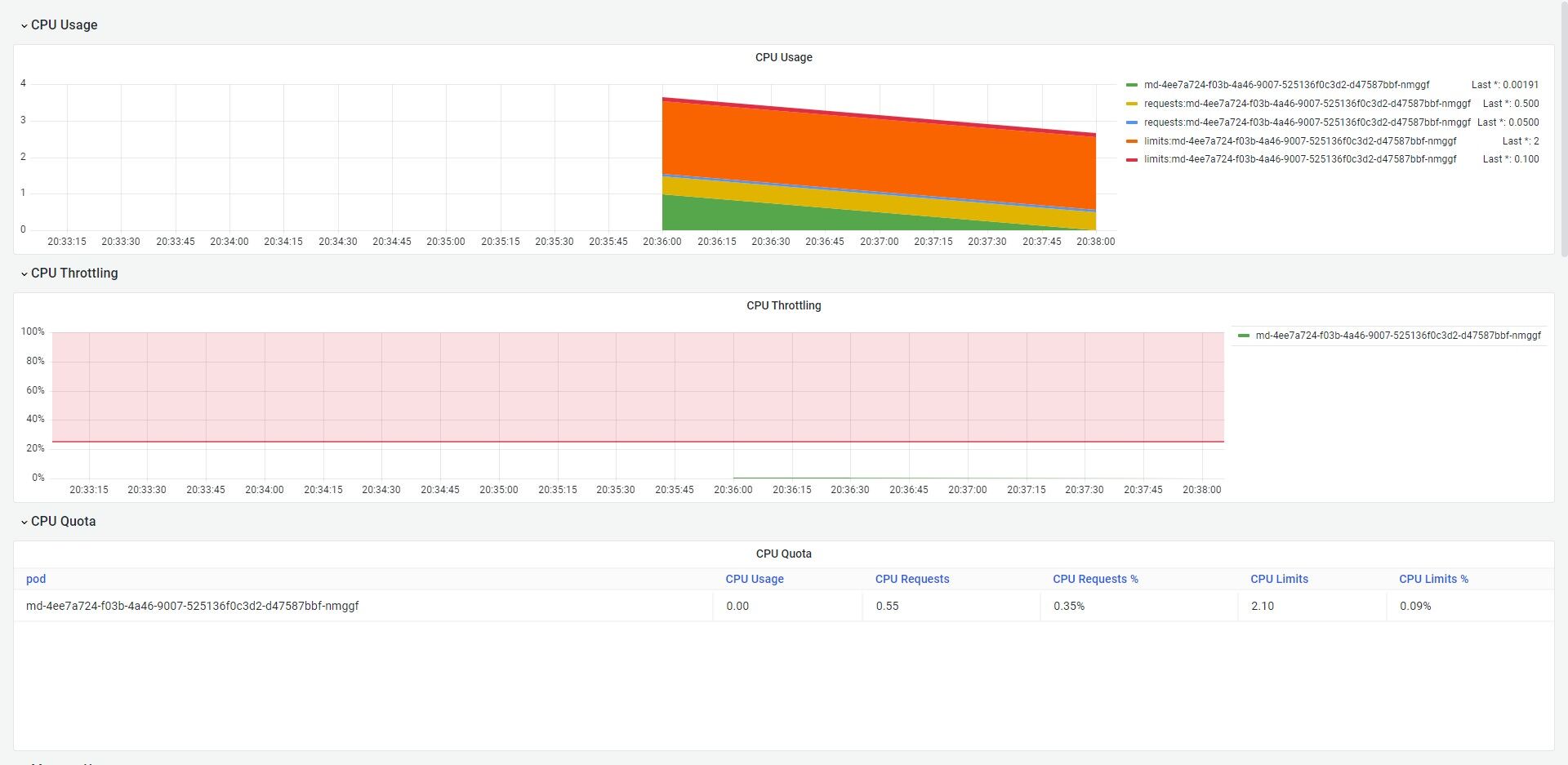
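Instead of clicking Refresh repeatedly, a script can poll the status on a fixed interval. This is a minimal sketch, assuming a hypothetical `get_status` callable that returns the status text shown in the Deploy section (for example "Processing" or "Running"); how you obtain that status is not covered here, and the "Failed" status name is also an assumption.

```python
import time
from typing import Callable


def wait_until_running(
    get_status: Callable[[], str],
    timeout_s: float = 600.0,
    interval_s: float = 10.0,
) -> str:
    """Poll get_status until it returns "Running", reports failure, or times out.

    get_status is a hypothetical callable, not a Katonic API.
    """
    deadline = time.monotonic() + timeout_s
    while True:
        status = get_status()
        if status == "Running":
            return status
        if status == "Failed":  # assumed name for a failed deployment
            raise RuntimeError("deployment failed; check the Logs option")
        if time.monotonic() >= deadline:
            raise TimeoutError(f"still {status!r} after {timeout_s} seconds")
        time.sleep(interval_s)


# Exercise the loop with a fake status source instead of a live deployment.
statuses = iter(["Processing", "Processing", "Running"])
print(wait_until_running(lambda: next(statuses), interval_s=0.0))  # prints Running
```

Passing a fake status source, as in the last two lines, is an easy way to test the loop without a live deployment.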

- Once your Model API is in the Running state, you can check its hardware resource consumption from the Usage option.

API Usage

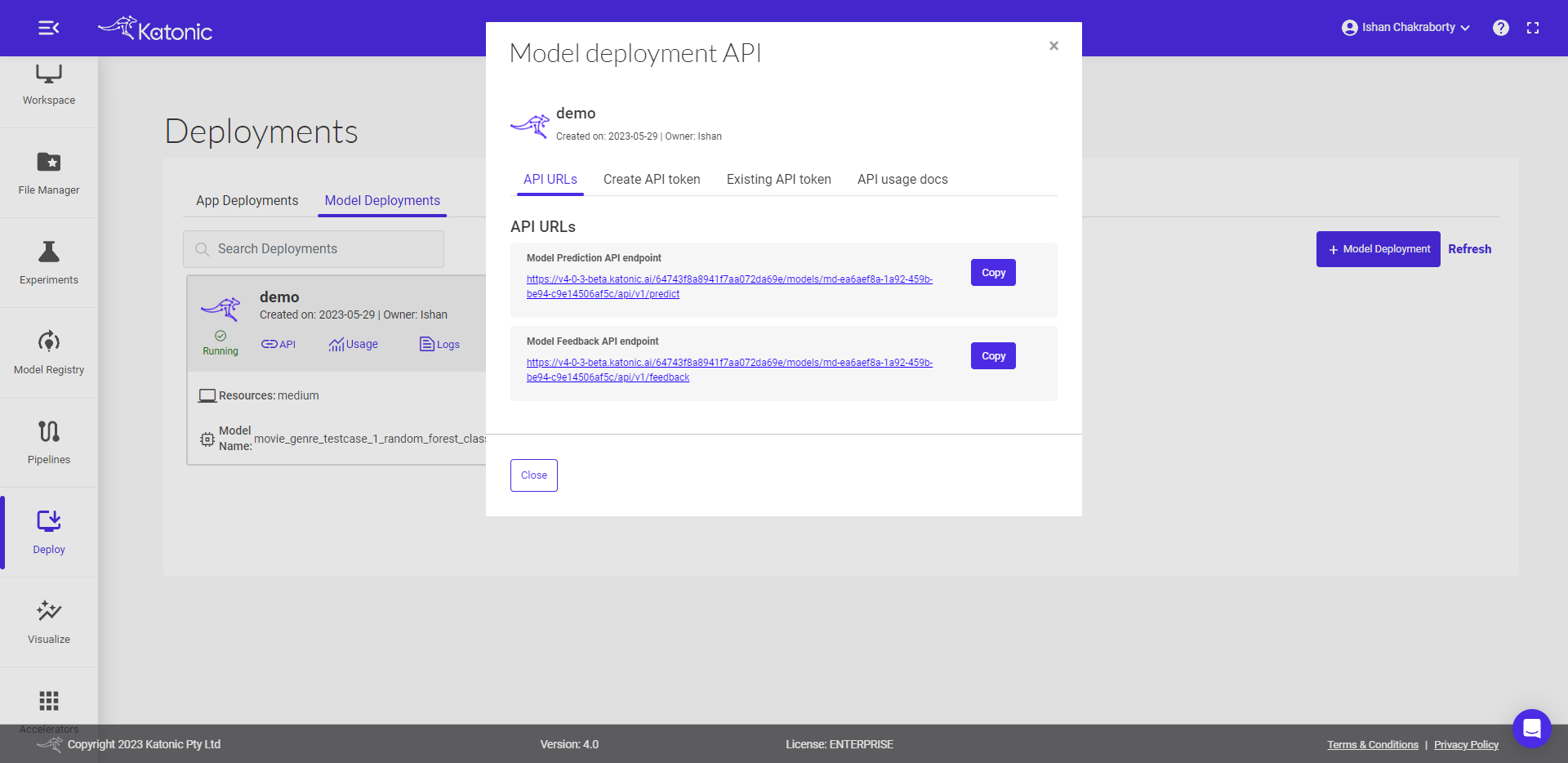

- You can access the API endpoints by clicking on API.

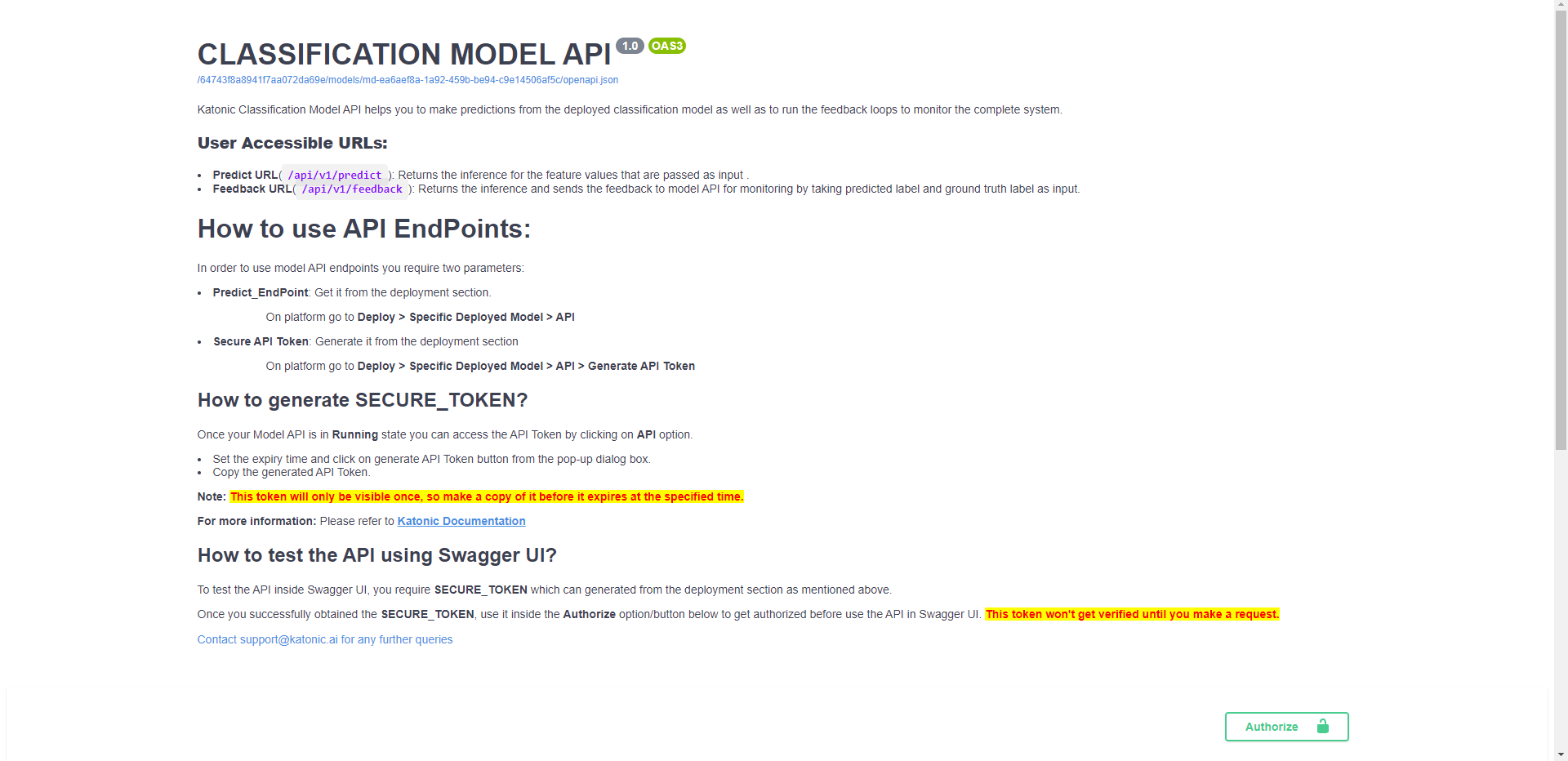

- There are two APIs under API URLs:

- Model Prediction API endpoint: This API generates predictions from the deployed model. Here is a code snippet that calls the predict API:

```python
import requests

MODEL_API_ENDPOINT = "Prediction API URL"
SECURE_TOKEN = "Token"

# Define the value format as per the schema file
data = {"data": "Define the value format as per the schema file"}

# verify=False skips TLS certificate verification
result = requests.post(MODEL_API_ENDPOINT, json=data, verify=False,
                       headers={"Authorization": SECURE_TOKEN})
print(result.text)
```

- Model Feedback API endpoint: This API monitors model performance once the true labels for the data are available. Save the predicted labels at your destination sources; once the true labels are available, pass both to the feedback URL to monitor the model continuously. Here is a code snippet that calls the feedback API:

```python
import requests

MODEL_FEEDBACK_ENDPOINT = "Feedback API URL"
SECURE_TOKEN = "Token"

true = "Pass the list of true labels"
pred = "Pass the list of predicted labels"

data = {"true_label": true, "predicted_label": pred}

# Send the labels to the feedback endpoint; verify=False skips TLS verification
result = requests.post(MODEL_FEEDBACK_ENDPOINT, json=data, verify=False,
                       headers={"Authorization": SECURE_TOKEN})
print(result.text)
```
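If you prefer to validate payloads before sending them, the helpers below build the same request bodies and Authorization header used in the prediction and feedback snippets, using only the Python standard library. This is a sketch, not a Katonic client: the function names are hypothetical, the exact format of `data` still comes from your schema file, and the length check on the label lists assumes one true label per prediction.

```python
import json
import urllib.request
from typing import Any, Sequence


def build_prediction_payload(data: Any) -> dict:
    """Wrap input values in the {"data": ...} body used by the predict snippet."""
    return {"data": data}


def build_feedback_payload(true_labels: Sequence[Any], predicted_labels: Sequence[Any]) -> dict:
    """Pair true and predicted labels in the body used by the feedback snippet."""
    if len(true_labels) != len(predicted_labels):
        raise ValueError(
            f"got {len(true_labels)} true labels but {len(predicted_labels)} predictions"
        )
    return {"true_label": list(true_labels), "predicted_label": list(predicted_labels)}


def build_request(url: str, token: str, payload: dict) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request carrying the token."""
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": token, "Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request, timeout_s: float = 30.0) -> str:
    """Send the request and return the response body as text."""
    with urllib.request.urlopen(request, timeout=timeout_s) as response:
        return response.read().decode("utf-8")
```

For example, `send(build_request(feedback_url, token, build_feedback_payload(true, pred)))` posts the labels, where `feedback_url` and `token` are the values from the API URLs list and the token dialog. Unlike the `requests` snippets with `verify=False`, `urllib.request.urlopen` verifies TLS certificates by default.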

Click Create API Token to generate a new token for accessing the API.

Give a name to the token.

Select the Expiration Time.

Set the Token Expiry Date.

Click Create Token to generate your API token, which is displayed in a pop-up dialog box.

Note: A maximum of 10 tokens can be generated for a model. Copy the generated API token and save it, because it is shown only once.
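Because the token is shown only once, it is worth storing it outside your scripts instead of pasting it into `SECURE_TOKEN` directly. A minimal sketch, assuming you export the token as an environment variable; the variable name `KATONIC_API_TOKEN` and the helper `load_api_token` are hypothetical.

```python
import os


def load_api_token(var_name: str = "KATONIC_API_TOKEN") -> str:
    """Read the API token from an environment variable (hypothetical name)."""
    token = os.environ.get(var_name, "").strip()
    if not token:
        raise RuntimeError(
            f"set the {var_name} environment variable to your saved API token"
        )
    return token
```

The returned value can then be used as `SECURE_TOKEN` in the snippets above.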

In the Existing API Token section, you can manage the generated tokens and delete any that are no longer needed.

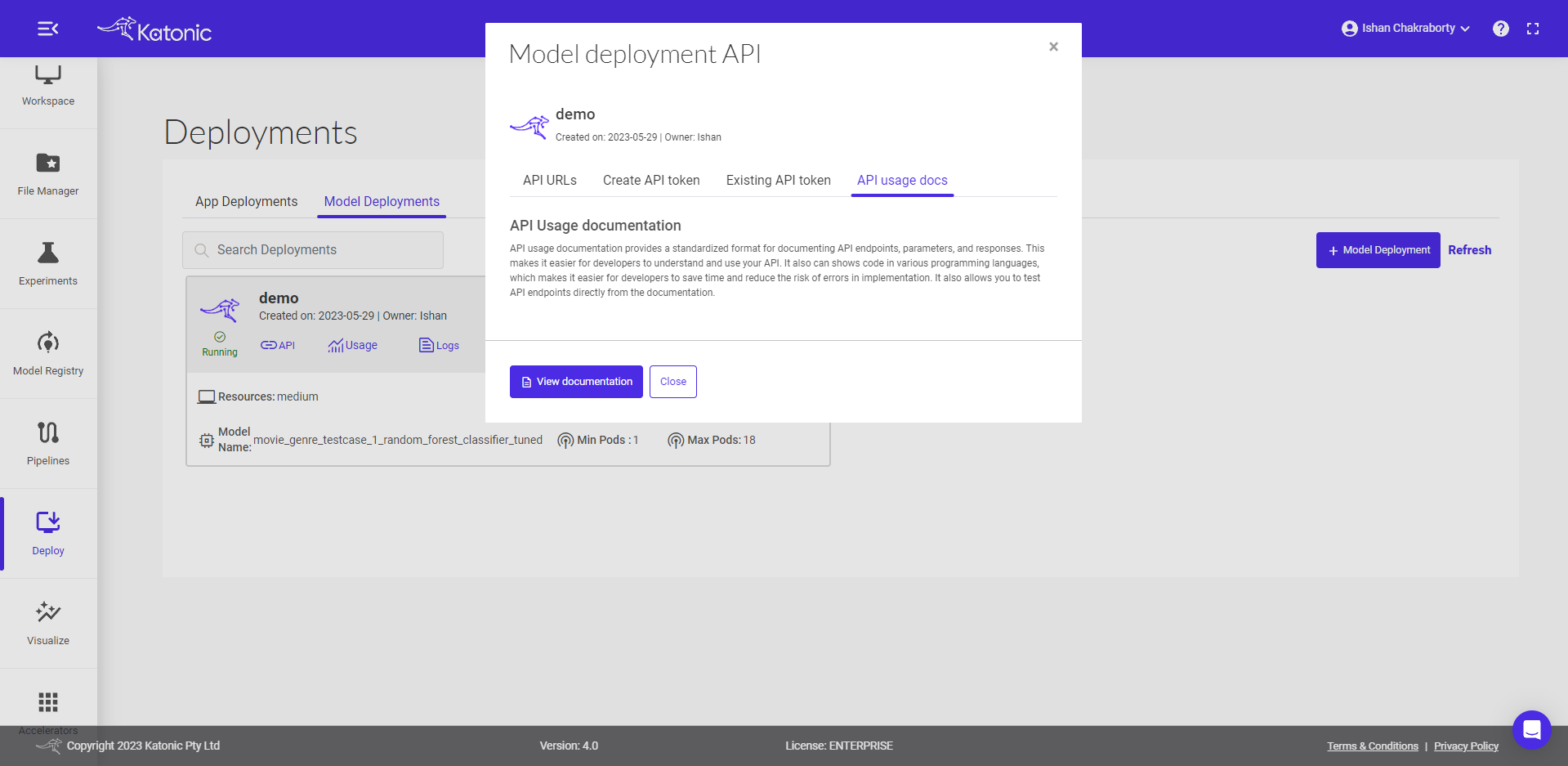

The API usage docs explain how to use the APIs and also let you test them.

To learn more about using the generated API, follow the steps below:

- The docs page is a guide to using the endpoint API. Here you can test the API with different inputs to check that the model works.

- To test the API, you first need to authorize yourself by adding the token as shown below. Click Authorize and close the pop-up.

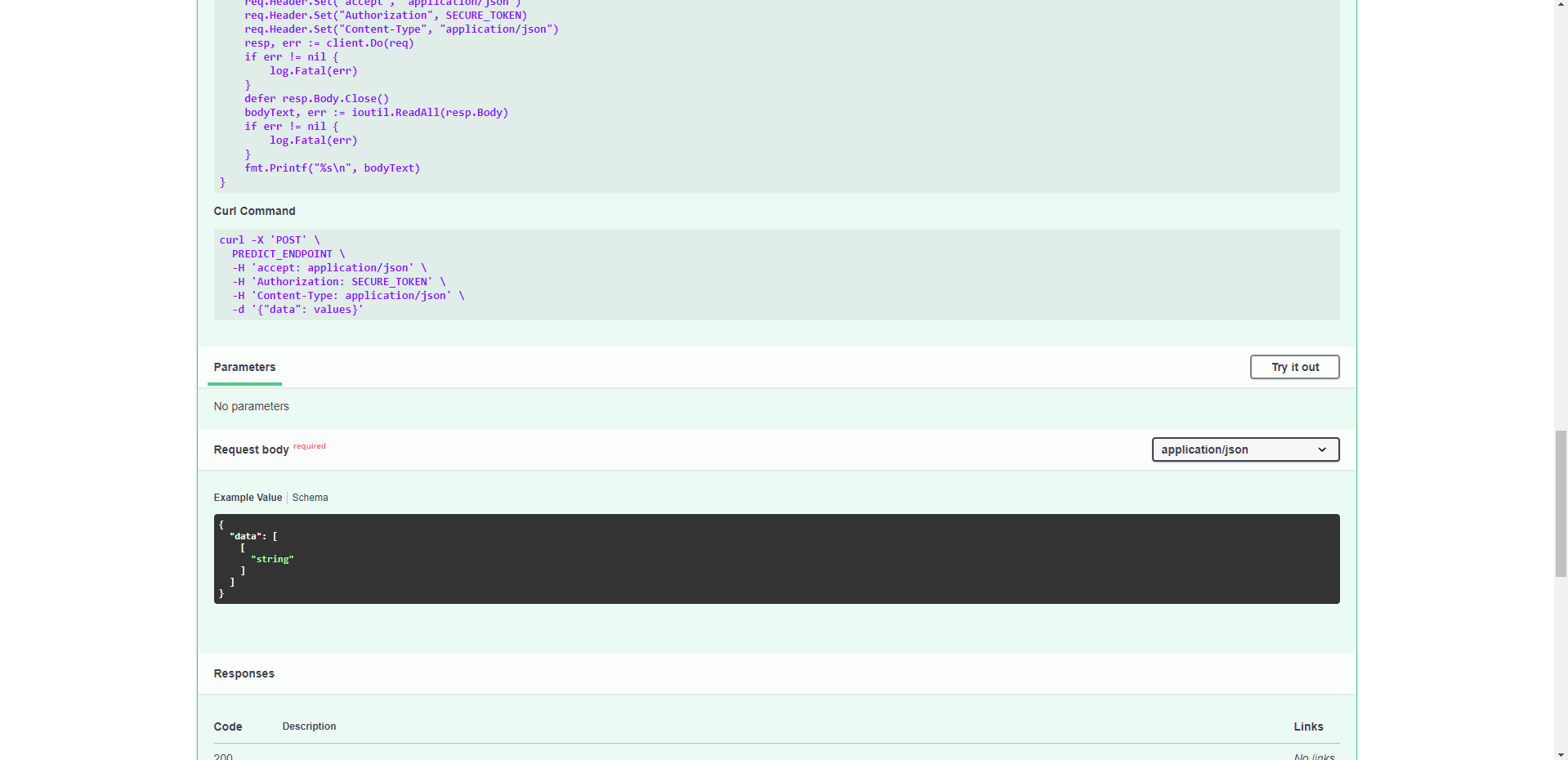

- Once authorized, click the Predict_Endpoint bar and scroll down to Try it out.

- Click the Try it out button to make the Request body panel editable. Enter some input values for testing; the number of values (features) in each record must equal the number of features used to train the model.

- Click Execute to see the prediction results at the bottom. If there are any errors, go back to the model card and check the error logs to investigate further.

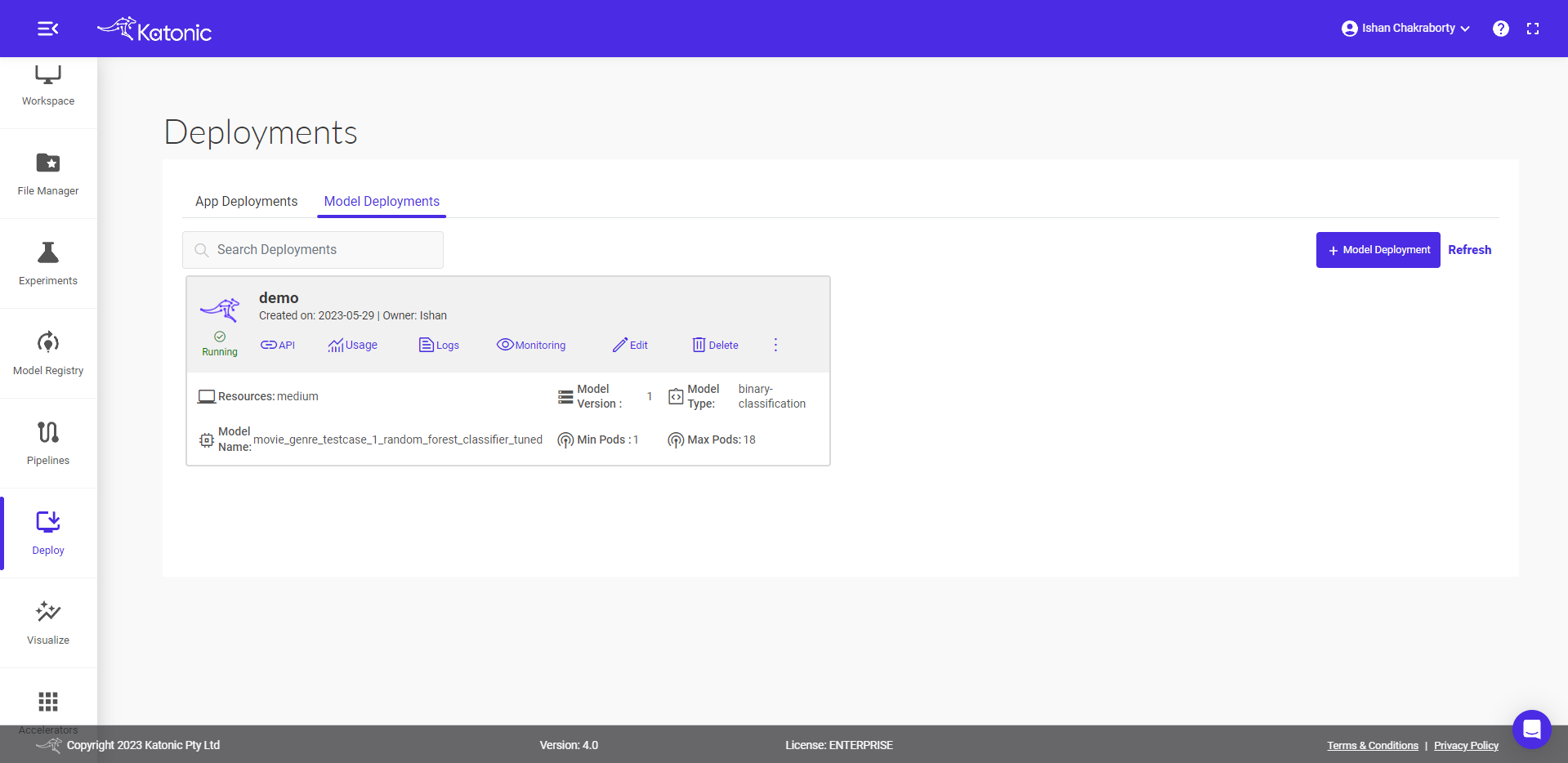

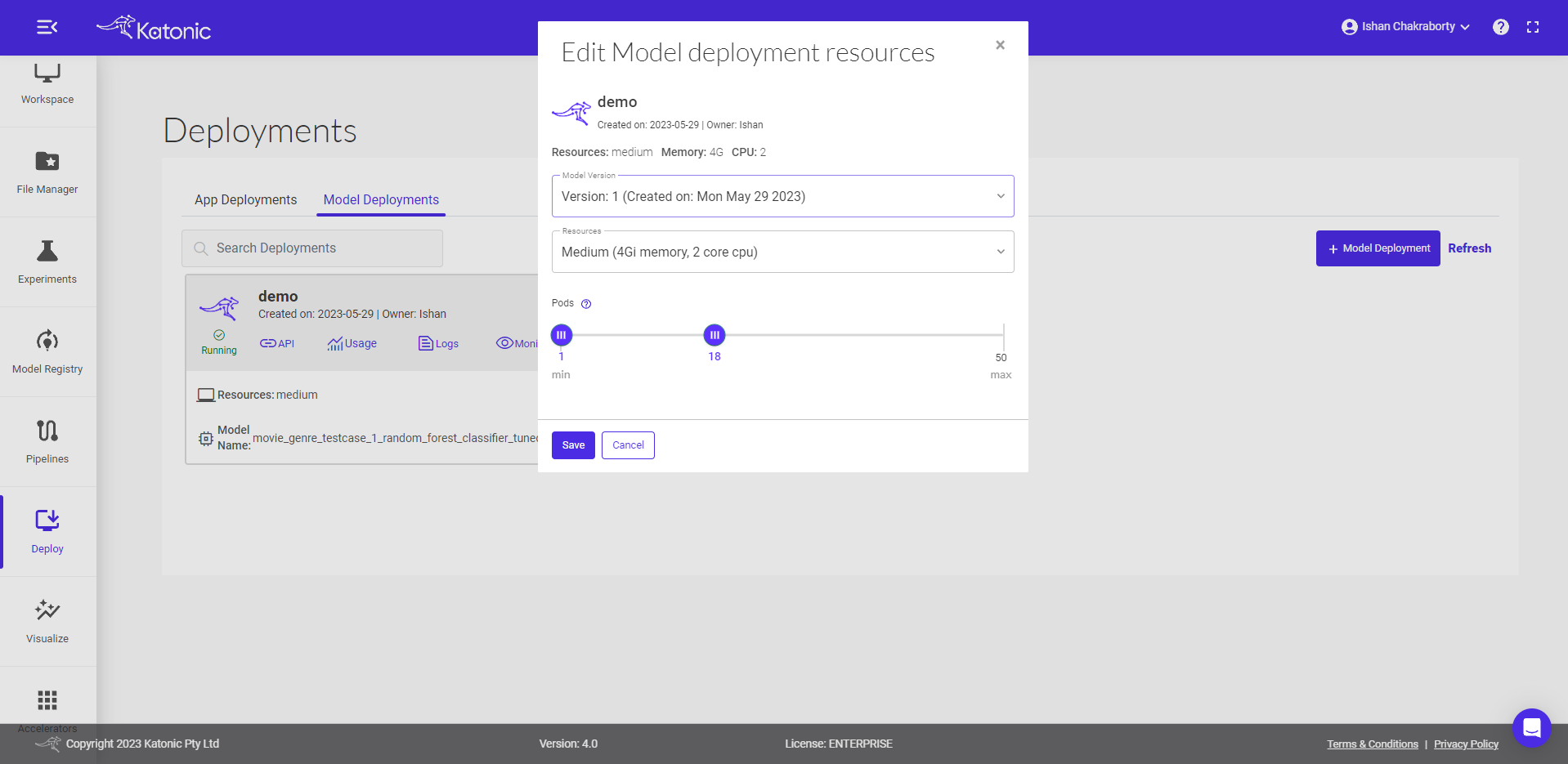
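Before testing in Try it out or calling the prediction endpoint from code, you can check that every record has as many values as the model was trained on. This sketch assumes each record is a flat list of feature values; the real request body shape is defined by your schema file, and `check_records` is a hypothetical helper.

```python
from typing import Sequence


def check_records(records: Sequence[Sequence[float]], n_features: int) -> None:
    """Raise ValueError if any record's length differs from the training feature count."""
    for i, record in enumerate(records):
        if len(record) != n_features:
            raise ValueError(
                f"record {i} has {len(record)} values; the model expects {n_features}"
            )


# Hypothetical example: a model trained on 4 features.
check_records([[5.1, 3.5, 1.4, 0.2], [6.2, 2.9, 4.3, 1.3]], n_features=4)  # passes
```

Catching a wrong feature count locally gives a clearer message than the error returned by the endpoint.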

- You can also modify the resources, version, and minimum and maximum pods of your deployed model by clicking the Edit option and saving the updated configuration.

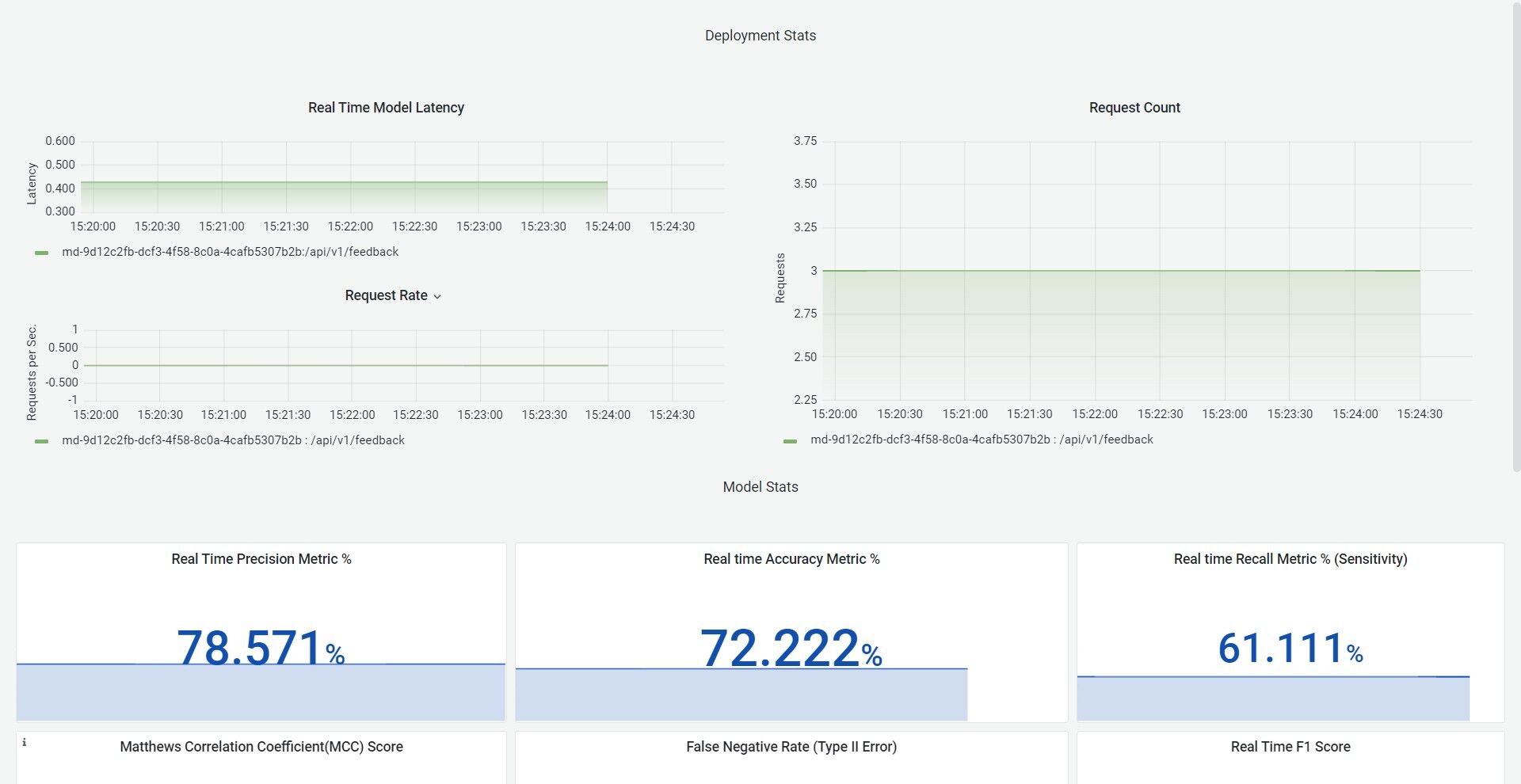

- Click Monitoring to open a dashboard in a new tab. The dashboard helps you monitor the effectiveness and efficiency of your deployed model. Refer to the Model Monitoring section of the documentation to learn more about the metrics that are monitored.

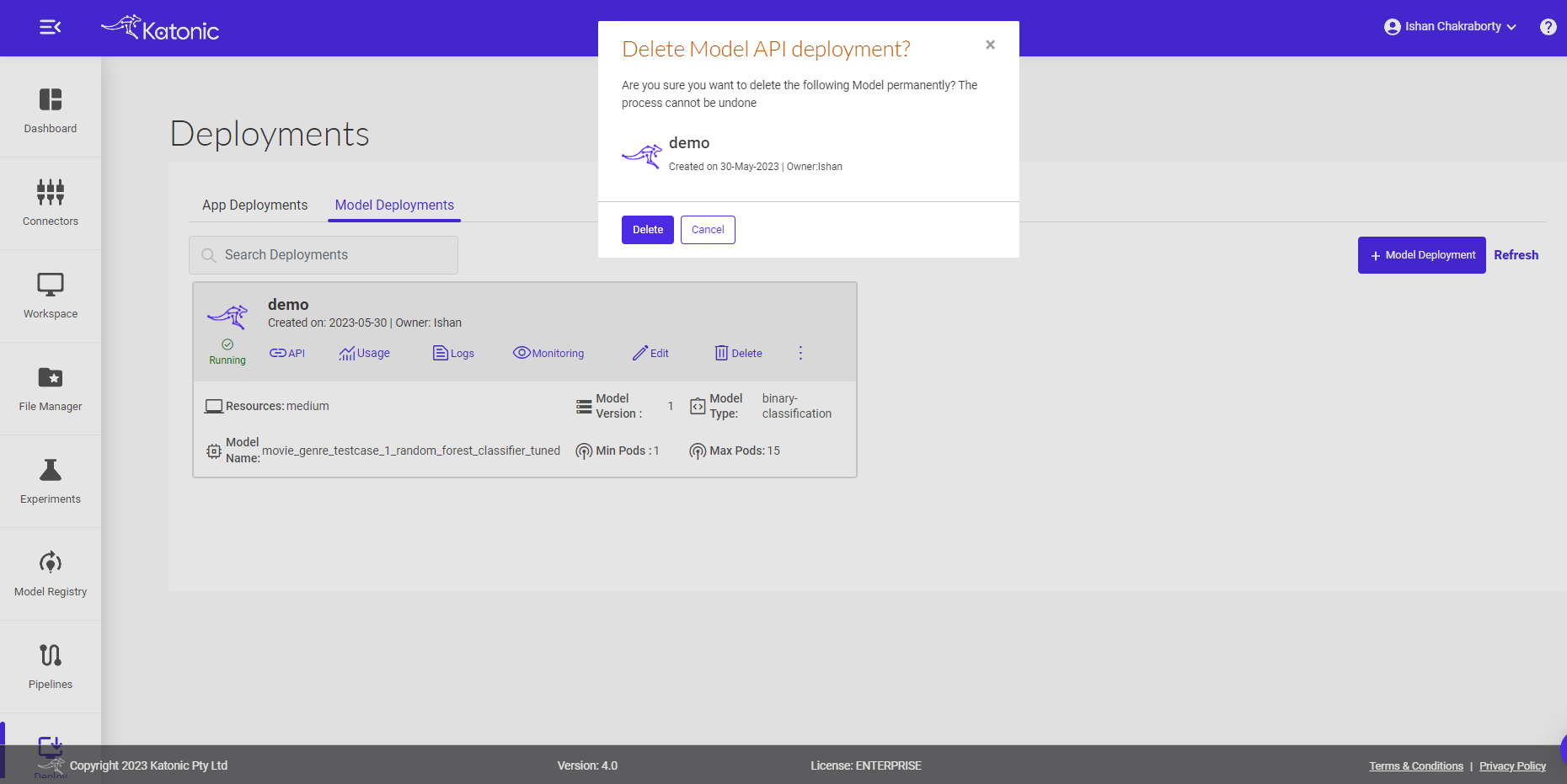

- To delete a deployed model that is no longer in use, click the Delete button.