GCP Private Cluster

This guide describes how to install, operate, administer, and configure the Katonic Platform in your own GCP Kubernetes cluster. This content applies to Katonic users with self-installation licenses.

Hardware Configurations

This configuration is intended for high-availability (HA) deployments and performance testing. It is sized for real-time execution of analytics, machine learning (ML), and artificial intelligence (AI) applications in a production pipeline.

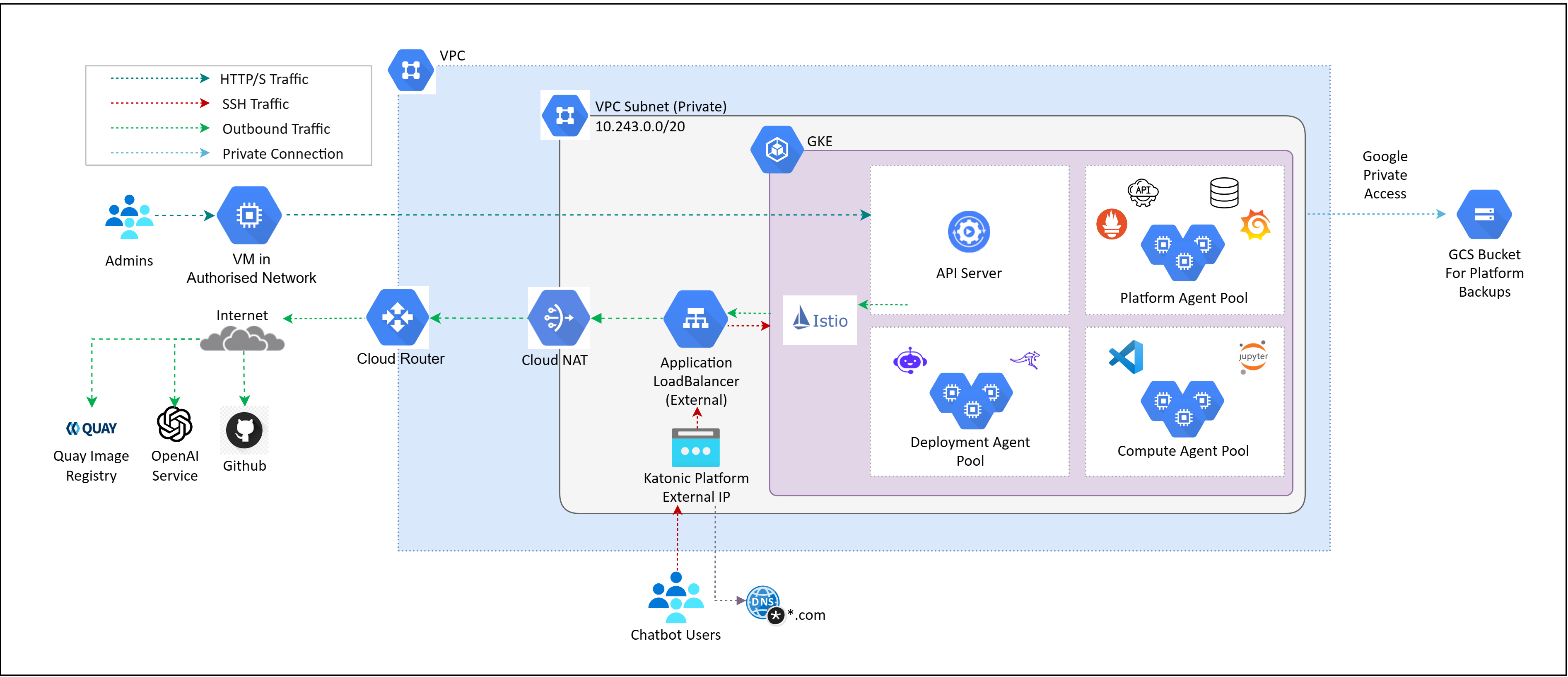

Katonic on GKE

The diagram above depicts the Katonic Platform on a private cluster with an external Application Load Balancer, which is allocated a public IP address outside the VPC's CIDR. This configuration makes the Katonic Platform accessible over the public internet. The platform itself still runs inside a private cluster, so the underlying services and infrastructure are shielded from direct public internet access. External traffic enters through the external load balancer, which distributes it across the resources in the private cluster.

Katonic can run on a Kubernetes cluster provided by GCP Google Kubernetes Engine. When running on GKE, the Katonic architecture uses GCP resources to fulfill the Katonic MLOps platform requirements as follows:

- Kubernetes control moves to the GKE control plane, with managed Kubernetes masters.

- A GCS bucket is used to store full platform backups.

- The pd.csi.storage.gke.io provisioner is used to create persistent volumes for Katonic executions.

- Katonic cannot be installed on GKE Autopilot.

- Using GKE node pools, the Katonic platform separates compute and platform workloads onto different sets of machines.

Your annual Katonic license fee does not include any charges incurred from using GCP services. You can find detailed pricing information for GCP services in the Google Cloud Pricing Calculator.

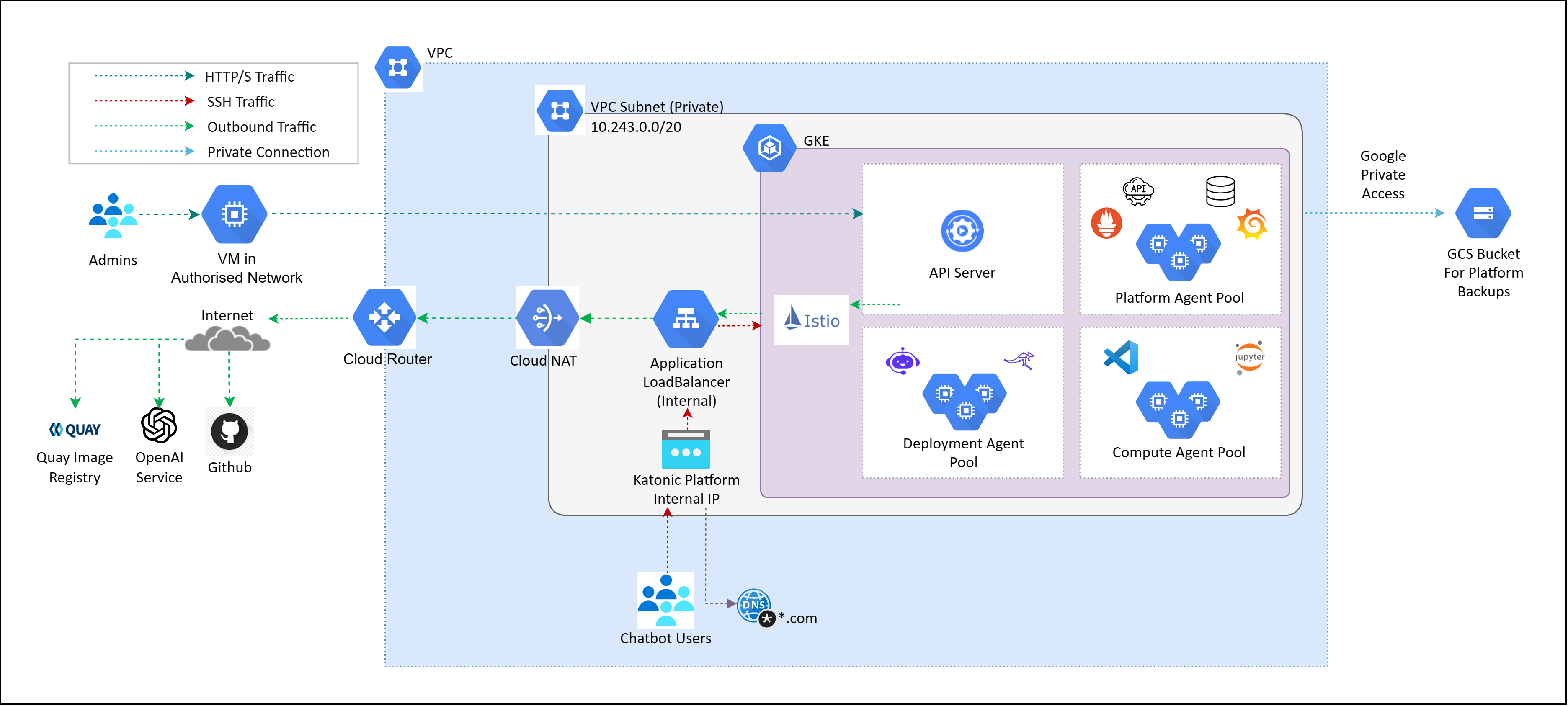

Architecture of Katonic Platform with Internal Application LoadBalancer on Private Cluster

With an internal Application Load Balancer, the Katonic Platform is assigned a private IP address within the VPC's CIDR. This configuration blocks public access, so the platform is reachable only from within the VPC, as shown in the image above. An internal ALB routes traffic within a VPC and is not directly accessible from the internet.

Setting up a GKE cluster for Private Katonic Platform

Prerequisites for setting up GKE:

VPC Subnet CIDR Notation:

- For Small Clusters: Use /26 CIDR notation, providing 64 IPs.

- For Larger Clusters: Consider /25 (128 IPs) or /24 (256 IPs).

- Balance current needs and future scalability when selecting the CIDR range.
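The sizing guidance above can be checked with a short calculation. The following is a minimal sketch that uses Python's standard `ipaddress` module. It assumes that GCP reserves four addresses in each primary IPv4 subnet range, so fewer addresses are assignable than the raw count suggests. The base address `10.0.0.0` and the function name `subnet_capacity` are hypothetical and chosen only for illustration.

```python
import ipaddress

# Assumption: GCP reserves four addresses in every primary IPv4 subnet range
# (network, default gateway, second-to-last, and broadcast).
GCP_RESERVED_PER_SUBNET = 4


def subnet_capacity(cidr: str) -> tuple[int, int]:
    """Return (total addresses, addresses left after the reserved ones)."""
    network = ipaddress.ip_network(cidr, strict=True)
    total = network.num_addresses
    return total, total - GCP_RESERVED_PER_SUBNET


# 10.0.0.0 is a hypothetical base address; only the prefix length matters here.
for prefix in (26, 25, 24):
    total, assignable = subnet_capacity(f"10.0.0.0/{prefix}")
    print(f"/{prefix}: {total} addresses, {assignable} assignable")
```

For a /26 subnet this gives 64 addresses in total, of which 60 remain assignable under the stated assumption. Keep this difference in mind when estimating headroom for nodes.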

Defining CIDR Range in GCP:

- Specify the chosen CIDR range during the creation of subnets in Google Cloud Platform (GCP).

Jump Host Machine for Access:

- Deploy a jump host machine with a public IP address.

- Whitelist the public IP of the jump host to ensure access to the GKE Private cluster for platform deployment.

Cloud NAT:

- Private networking is established by default in GKE clusters during creation.

- However, Cloud NAT is required for outbound internet access, so that cluster operations such as image pulls work without exposing public IPs.

By following these guidelines, you can create a well-structured VPC with appropriate CIDR notation, facilitate secure access through a jump host, and ensure necessary internet connectivity for GKE cluster operations using Cloud NAT.

This section describes how to configure a GCP GKE cluster for use with Katonic. When configuring a GKE cluster for Katonic, you must be familiar with the following GCP services:

- Google Kubernetes Engine (GKE)

- Identity and Access Management (IAM)

- Virtual Private Cloud (VPC) Networking

- Disks

- GCP Filestore

- Google Cloud Storage (GCS)

Additionally, a basic understanding of Kubernetes concepts like node pools, network CNI, storage classes, autoscaling, and Docker will be useful when deploying the cluster.

Service Account and Permissions

- During the GKE cluster installation process, create a dedicated service account.

- Attach the "Kubernetes Engine Node Service Agent" role to the service account. This role provides the minimal set of permissions required by a GKE node to support standard capabilities, including logging and monitoring export, as well as image pulls.

- Note the identifier (ID) of the created service account.

- Incorporate the service account's ID into the configuration file katonic.yml as part of the setup process.

IAM Permissions for User

In order to complete the installation, the IAM user must have the following GCP permissions. These permissions include both GCP Managed Roles and Custom Managed Roles that need to be created and attached to the IAM user.

GCP Managed Roles:

- Compute Instance Admin (v1)

- Editor

- Kubernetes Engine Admin

- Project IAM Admin

- Storage Object Admin

GCP Custom Managed Roles:

These IAM roles must be created manually in your GCP account and assigned to the appropriate IAM user.

Custom role permissions:

- iam.roles.create

- iam.roles.delete

- iam.roles.update
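As one possible way to apply the roles listed above, the following sketch builds the corresponding `gcloud` commands as strings without running them. The mapping from role display names to role IDs is an assumption and should be checked against the IAM roles reference. The project ID, user email, custom role ID, and role title are hypothetical placeholders.

```python
import shlex

# Assumed role IDs for the managed roles named in this guide.
MANAGED_ROLES = {
    "Compute Instance Admin (v1)": "roles/compute.instanceAdmin.v1",
    "Editor": "roles/editor",
    "Kubernetes Engine Admin": "roles/container.admin",
    "Project IAM Admin": "roles/resourcemanager.projectIamAdmin",
    "Storage Object Admin": "roles/storage.objectAdmin",
}

# Permissions for the custom role, taken from the list above.
CUSTOM_ROLE_PERMISSIONS = ["iam.roles.create", "iam.roles.delete", "iam.roles.update"]


def grant_commands(project_id: str, user_email: str, custom_role_id: str) -> list[str]:
    """Build gcloud commands that create the custom role and bind every role."""
    commands = [
        shlex.join([
            "gcloud", "iam", "roles", "create", custom_role_id,
            f"--project={project_id}",
            "--title=Katonic installer custom role",  # hypothetical title
            f"--permissions={','.join(CUSTOM_ROLE_PERMISSIONS)}",
        ])
    ]
    roles = list(MANAGED_ROLES.values())
    roles.append(f"projects/{project_id}/roles/{custom_role_id}")
    for role in roles:
        commands.append(shlex.join([
            "gcloud", "projects", "add-iam-policy-binding", project_id,
            f"--member=user:{user_email}",
            f"--role={role}",
        ]))
    return commands


# Hypothetical values, for illustration only.
for command in grant_commands("my-project", "installer@example.com", "katonicInstaller"):
    print(command)
```

Printing the commands rather than executing them lets you review each binding before applying it.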

Service quotas

GCP maintains default service quotas for each of the services listed previously. You can check the default service quotas and manage your quotas on the GCP Quotas page.

Create Google Kubernetes Engine (GKE)

By default, the Katonic installer creates the GKE cluster. If you create the GKE cluster yourself, first create a new, separate VPC with 1 subnet and 2 zones, and then create the GKE cluster in that VPC.

Dynamic block storage

The GKE cluster must have a volume-backed storage class that Katonic uses to provision ephemeral volumes for user executions. The Katonic installer creates this storage class by default. If you create the cluster yourself, you need to create the kfs storage class. Use the following example storage class specification:

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  annotations:
    components.gke.io/layer: addon
  labels:
    addonmanager.kubernetes.io/mode: EnsureExists
    k8s-app: gcp-compute-persistent-disk-csi-driver
  name: kfs
parameters:
  type: pd-balanced
provisioner: pd.csi.storage.gke.io
reclaimPolicy: Delete
volumeBindingMode: WaitForFirstConsumer
allowVolumeExpansion: true
```

Dynamic shared storage

The GCP Filestore service must be provisioned, and an access point must be configured to allow access from the GKE cluster. The Katonic installer has an optional parameter, shared_storage.create, that creates a GCP Filestore-based storage class. If you create the cluster yourself, you can create the dynamic shared storage class using the following YAML:

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  annotations:
    components.gke.io/component-name: filestorecsi
    components.gke.io/component-version: 0.4.30
    components.gke.io/layer: addon
  labels:
    addonmanager.kubernetes.io/mode: EnsureExists
    k8s-app: gcp-filestore-csi-driver
  name: kfs-shared
parameters:
  tier: premium
provisioner: filestore.csi.storage.gke.io
reclaimPolicy: Delete
volumeBindingMode: Immediate
allowVolumeExpansion: true
```
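To confirm that both storage classes provision volumes, you can create a test claim against each one. The sketch below generates PersistentVolumeClaim manifests as JSON, which `kubectl apply -f` accepts alongside YAML. The claim names, sizes, and the helper `pvc_manifest` are hypothetical. The access modes are an assumption: ReadWriteOnce for the disk-backed kfs class and ReadWriteMany for the Filestore-backed kfs-shared class. Filestore tiers have their own minimum instance sizes, so treat the requested size as a placeholder.

```python
import json


def pvc_manifest(name: str, storage_class: str, access_mode: str, size: str) -> dict:
    """Build a PersistentVolumeClaim manifest for the given storage class."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "storageClassName": storage_class,
            "accessModes": [access_mode],
            "resources": {"requests": {"storage": size}},
        },
    }


# Hypothetical claim names and sizes, for illustration only.
block_claim = pvc_manifest("example-block", "kfs", "ReadWriteOnce", "10Gi")
shared_claim = pvc_manifest("example-shared", "kfs-shared", "ReadWriteMany", "1Ti")

for claim in (block_claim, shared_claim):
    print(json.dumps(claim, indent=2))
```

Because kfs uses volumeBindingMode: WaitForFirstConsumer, a claim against it stays pending until a pod that mounts it is scheduled. A claim against kfs-shared binds immediately.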

GCS Bucket

The GCS bucket stores backups of the GKE cluster. The Katonic installer has an optional parameter, backup_enabled, that creates a GCS bucket and enables backups. By default, a backup is scheduled every 24 hours and each backup expires after 30 days. You can configure these settings in the katonic.yml template file.
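The default schedule and expiry determine roughly how many backups the bucket holds at any time. The following minimal sketch works through that arithmetic with the defaults stated above. The function names are hypothetical, and the result is an estimate that assumes every scheduled backup succeeds.

```python
from datetime import datetime, timedelta, timezone

# Defaults stated in this guide.
BACKUP_INTERVAL = timedelta(hours=24)
BACKUP_TTL = timedelta(days=30)


def retained_backups(interval: timedelta, ttl: timedelta) -> int:
    """Estimate how many unexpired backups exist once the schedule is in steady state."""
    return ttl // interval  # dividing two timedeltas with // yields an int


def expiry_time(created_at: datetime, ttl: timedelta = BACKUP_TTL) -> datetime:
    """Return the moment at which a backup taken at created_at expires."""
    return created_at + ttl


created = datetime(2024, 1, 1, tzinfo=timezone.utc)  # hypothetical backup time
print(retained_backups(BACKUP_INTERVAL, BACKUP_TTL))
print(expiry_time(created).isoformat())
```

With the defaults, about 30 backups are retained. Shortening the interval or lengthening the expiry increases GCS storage usage proportionally.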

GCP GKE Cluster Autoscaler

The Katonic installer has an optional parameter, autoscaler.enabled, that enables the cluster autoscaler. If you create the GKE cluster yourself, enable the GKE cluster autoscaler, set the location policy to "Balanced", and set the size limit type to "Total limits".

Note: If the cluster is deployed in 2 zones, specify the node locations.

Domain

Katonic must be configured to serve from a specific FQDN. To serve Katonic securely over HTTPS, you will also need an SSL certificate that covers the chosen name. Record the FQDN for use when installing Katonic.

Katonic offers the default option to use the .katonic.ai domain in all versions of the Katonic Platform. However, if you have your own domain, you can also utilize it across all versions provided by the Katonic Platform.

Resources Provisioned Post-Installation

When the platform is installed, it creates the following resources. Take this into account when selecting your installation configuration.

| Sr no. | Type | Amount | When | Notes |
|---|---|---|---|---|
| 1 | Load Balancer | 1 | Always | Only 1 is required. Automatically gets created by GKE when required. |
| 2 | Network interface | 1 per node | Always | |
| 3 | OS boot disk | 1 per node | Always | |
| 4 | Public IP address | 1 per node | The platform has public IP addresses. | |
| 5 | VPC | 1 | The platform is deployed to a new VPC. | |
| 6 | Filestore | 1 | For shared storage | |
| 7 | GKE Cluster | 1 | GKE is used as the application cluster | Version 1.27 |

Kubernetes (GKE) version

Katonic MLOps platform version 4.4 has been validated with Kubernetes (GKE) version 1.27 and above.

GCP GKE High Availability

When deploying a GKE (Google Kubernetes Engine) cluster on Google Cloud Platform, you have the flexibility to choose the level of high availability (HA) that suits your needs. The configuration you select will determine the number of nodes provisioned for your GKE cluster. By utilizing the min_count flag during the cluster setup, you can specify the desired number of nodes per zone.

High Availability (With HA) Deployment Option: When you select the High Availability (With HA) deployment option, your GKE cluster will be set up with two zones. GKE will distribute the nodes across these zones within your chosen region. This configuration ensures exceptional availability and fault tolerance for your cluster by guaranteeing that each zone contains at least one node. GKE will create a total of four nodes, with two nodes allocated to each of the two zones, if you set the minimum count to two.

Without High Availability (Without HA) Deployment Option: In the Without High Availability (Without HA) deployment option, your GKE cluster will utilize a single zone. All the nodes will be created within this zone. For instance, if you set the minimum count to two in a Without High Availability deployment, GKE will create a total of two nodes within the same zone.

By offering both High Availability and Without High Availability deployment options, GKE enables you to choose the level of fault tolerance and complexity that aligns with your specific requirements.
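The relationship between min_count and the total node count can be written as a one-line calculation. This is a minimal sketch of the arithmetic described above: two zones with HA, one zone without. The function name `total_nodes` is hypothetical and is not part of the Katonic installer.

```python
def total_nodes(min_count: int, high_availability: bool) -> int:
    """Return the number of nodes GKE creates for a given per-zone minimum."""
    if min_count < 0:
        raise ValueError("min_count must not be negative")
    # With HA the cluster spans two zones; without HA it uses a single zone.
    zones = 2 if high_availability else 1
    return min_count * zones


print(total_nodes(2, high_availability=True))
print(total_nodes(2, high_availability=False))
```

With min_count set to two, this matches the examples above: four nodes with HA and two nodes without HA.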

Data Visualisation

Katonic MLOps platform 4.4 includes Superset version 2.0.1 for data visualisation.

You need an additional DNS record if you are installing Superset.

Example:

- If the domain name used to access the platform is katonic.tesla.com,

- then the domain for data visualisation would be dash-katonic.tesla.com.

Connectors

Katonic MLOps platform 4.4 includes Airbyte version 0.40.32 for connectors.

You need an additional DNS record if you are installing Airbyte.

Example:

- If the domain name used to access the platform is katonic.tesla.com,

- then the domain for connectors would be connectors-katonic.tesla.com.
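The naming pattern in the Data Visualisation and Connectors examples can be sketched as a small helper that lists the extra hostnames to register for a given platform domain. The function name `addon_hostnames` and the dictionary keys are hypothetical. The `dash-` and `connectors-` prefixes are taken from the examples in this guide.

```python
def addon_hostnames(platform_fqdn: str) -> dict[str, str]:
    """Return the additional hostnames needed for Superset and Airbyte."""
    fqdn = platform_fqdn.strip().lower()
    if not fqdn:
        raise ValueError("platform_fqdn must not be empty")
    return {
        "data_visualisation": f"dash-{fqdn}",
        "connectors": f"connectors-{fqdn}",
    }


for component, hostname in addon_hostnames("katonic.tesla.com").items():
    print(f"{component}: {hostname}")
```

Make sure that your SSL certificate also covers any additional hostnames you register.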

Katonic Platform Installation

Installation of the Katonic platform has been segmented based on product. When you click the link, you will be redirected to the installation process documentation.